You may remember this topic, where I installed a disk cage and card to allow hotplugged SATA disks.

I have only now got around to doing some comparisons.

I first did a backup of my MX23 home directory to a Seagate 1TB USB drive on a USB 3.0 port:

rsync -aAXvH /home/nevj/ /media/nevj/Linux-external/mx23home

sent 17,830,349,831 bytes received 475,175 bytes 36,131,357.66 bytes/sec

total size is 18,476,704,353 speedup is 1.04

real 8m12.785s

user 0m24.130s

sys 1m13.611s

Then I rsynced the same directory to a 1TB SATA HDD in my hotplug cage:

rsync -aAXvH /home/nevj/ /media/nevj/Backup1/mx23home

sent 18,481,656,815 bytes received 533,272 bytes 107,143,130.94 bytes/sec

total size is 18,476,869,580 speedup is 1.00

real 2m52.456s

user 0m26.847s

sys 1m16.254s

We can see that the removable SATA disk is about 2.9x faster in real (wall-clock) time, 2m52s against 8m13s, while the user and system CPU times are nearly the same for both runs.

That is what I was trying to achieve, so I am quite satisfied so far. (The home directory size increased slightly for the second run because I was using the browser.)
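For anyone who wants to check the ratio, here is a minimal Python sketch that converts the two `real` times to seconds and works out the speedup and the effective transfer rates. It was not part of the test itself; the helper name `parse_time` and the variable names are only illustrative, and the figures are copied from the two runs above. The rates it gives can differ slightly from the ones rsync prints, since rsync uses its own elapsed time.

```python
import re


def parse_time(text: str) -> float:
    """Convert a `time`-style duration such as '8m12.785s' to seconds."""
    match = re.fullmatch(r"(\d+)m([\d.]+)s", text)
    if match is None:
        raise ValueError(f"unrecognised duration: {text!r}")
    return int(match.group(1)) * 60 + float(match.group(2))


# Figures copied from the two rsync runs in this post.
usb = {"real": "8m12.785s", "bytes_sent": 17_830_349_831}
sata = {"real": "2m52.456s", "bytes_sent": 18_481_656_815}

usb_secs = parse_time(usb["real"])
sata_secs = parse_time(sata["real"])

# Wall-clock speedup of the SATA run over the USB run.
speedup = usb_secs / sata_secs

# Effective throughput in MB/s (1 MB = 10**6 bytes).
usb_rate = usb["bytes_sent"] / usb_secs / 1e6
sata_rate = sata["bytes_sent"] / sata_secs / 1e6

print(f"USB : {usb_secs:.1f} s, {usb_rate:.1f} MB/s")
print(f"SATA: {sata_secs:.1f} s, {sata_rate:.1f} MB/s")
print(f"SATA is {speedup:.2f}x faster in wall-clock time")
```

The same script can be reused for later runs by swapping in the new `real` times and byte counts.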

That MX home partition was 18 GB, a mix of lots of small files and a few large ISO files from the Download directory.

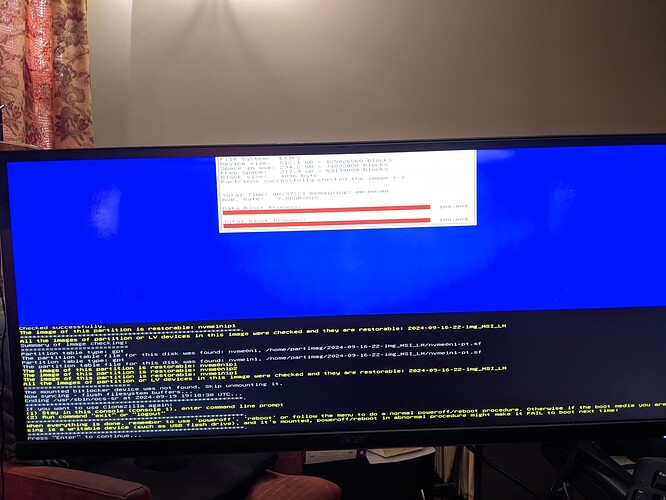

I plan to test rsync further with a directory that contains only large files. I also plan to test full Clonezilla image backups, which have been taking hours with my USB drive.