In another post, I mentioned that I’m working on writing a book, and that I’m using GitLab along with Visual Studio Code for that purpose. I’m a big proponent of self-hosting everything, so of course I self-hosted GitLab CE.

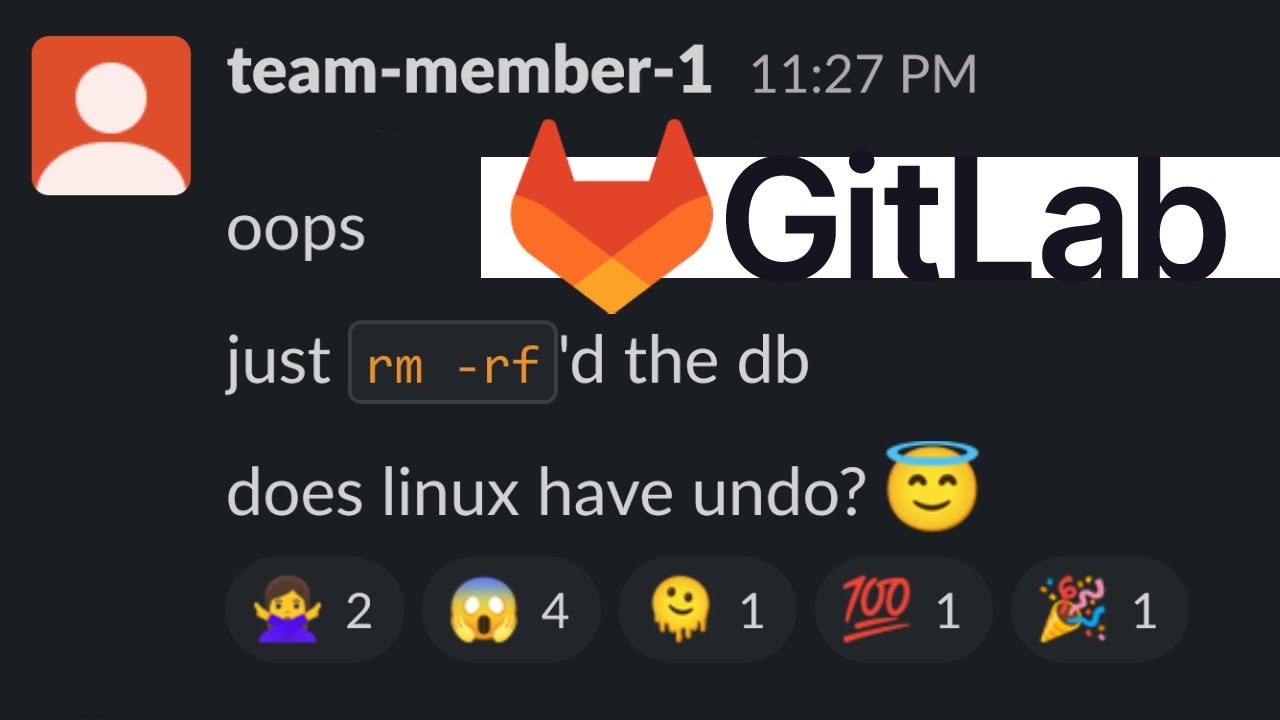

GitLab uses PostgreSQL for its database back-end, and I get fascinated with the strangest things, so the latest thing that’s been fascinating me is the GitLab data loss incident that happened back in 2017. The long and the short of it: when you have two SSH windows open, make sure you run the rm -rf command you want to run on the right server, and make sure the backups you think you have actually exist and are working… or you’ll end up like they did back then. I think I posted a video on that a while back with the comment “this makes me want to run and test my backups” … I should see if I can find it.

I decided to replicate their setup, so I installed PostgreSQL on the VM I had GitLab installed on, moved all production data over to that new database, and then installed a replica database on a Linode and set up a master/replica configuration. A replica only guards against losing the main server, though, since a bad delete gets replicated too. So as long as I also have working backups, my book should be delete-proof. lol
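Here’s a minimal sketch of the kind of “do my backups actually exist” sanity check I have in mind. It isn’t part of GitLab or PostgreSQL. The function name `check_latest_backup`, the 24 hour age limit, and the 1 KiB minimum size are all assumptions I picked for illustration. It just looks at the newest file in a backup folder and complains if that file is missing, tiny, or stale.

```python
import time
from pathlib import Path


def check_latest_backup(backup_dir, max_age_hours=24.0, min_size_bytes=1024, now=None):
    """Return (ok, message) describing the newest file in backup_dir.

    The thresholds are illustrative defaults, not recommendations.
    `now` is a Unix timestamp; it defaults to the current time and is
    only a parameter so the check is easy to exercise in a test.
    """
    files = [p for p in Path(backup_dir).iterdir() if p.is_file()]
    if not files:
        return False, "no backup files found"

    # Pick the most recently modified file as "the latest backup".
    newest = max(files, key=lambda p: p.stat().st_mtime)
    info = newest.stat()

    # A backup that is only a few bytes long almost certainly failed.
    if info.st_size < min_size_bytes:
        return False, f"{newest.name} is only {info.st_size} bytes"

    # A backup that is too old means the backup job has stopped running.
    current = time.time() if now is None else now
    age_hours = (current - info.st_mtime) / 3600
    if age_hours > max_age_hours:
        return False, f"{newest.name} is {age_hours:.1f} hours old"

    return True, f"{newest.name} looks fine"
```

I’d call it with something like `check_latest_backup("/var/opt/gitlab/backups")` from a cron job (that path is an assumption on my part, so point it at wherever your backups really land). Keep in mind this only proves a recent, non-trivial file exists. The only real test of a backup is restoring it somewhere and checking the data.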

I’m going to write an article soon on how to self-host GitLab, and I’d love to write an article on how to set up a master/replica database in PostgreSQL, but the tutorials that are out there are really good, so I’d need to come up with a way to make mine superior in some way before I write that kind of thing…

I feel like a lot of DBAs are cursed, because whenever a big data loss incident happens, they’re usually at the center of it. IDK if they have backup administrators, or if they’re responsible for their own backups, but … I can tell you that if I get a job doing that, I’m going to be a complete bear about backups… lol, we’re running alert force drills at that place if I’m a DBA. lol