Utilities for managing .csv files

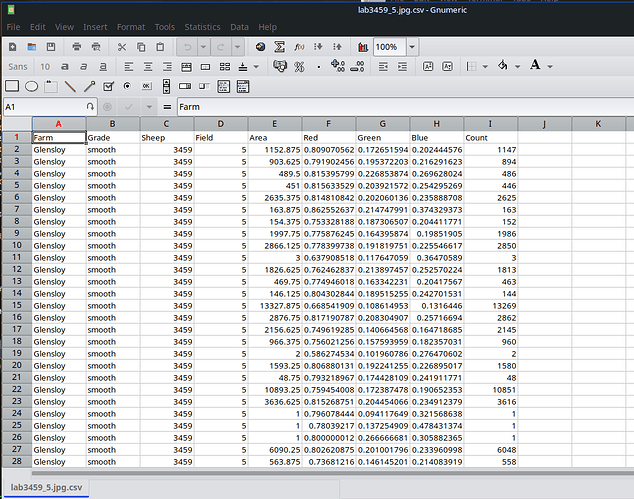

I was processing some image data recently. It was in the form of .csv files, which have data values separated by commas. They look like this

Farm,Grade,Sheep,Field,Area,Red,Green,Blue,Count

Glensloy,smooth,3465,5,1.0,0.7843137383460999,0.5764706134796143,0.5137255191802979,1

Glensloy,smooth,3465,5,23.375,0.7505541495654894,0.18942882217790769,0.25200341577115265,23

Glensloy,smooth,3465,5,16.25,0.8262255005538464,0.21200980711728334,0.27990197017788887,16

Glensloy,smooth,3465,5,23.125,0.7923351282423193,0.22834225337613712,0.3222816484895619,22

....

They usually start with a line of field headings, then follow lines of data fields.

I found that .csv files do not process readily with normal Linux utilities, but I discovered two packages which are specific for processing .csv files. They are called csvkit and miller.

Installation

The packages csvkit and miller are available in Debian based and Arch based distros.

Lets install them in my MX

apt install csvkit csvkit-doc

apt install miller

Simple. What you get is a whole set of new commands specifically for .csv files

$ man -k csv

csv2rec (1) - csv to rec converter

csvclean (1) - manual page for csvclean 1.0.7

csvcut (1) - manual page for csvcut 1.0.7

csvformat (1) - manual page for csvformat 1.0.7

csvgrep (1) - manual page for csvgrep 1.0.7

csvjoin (1) - manual page for csvjoin 1.0.7

csvjson (1) - manual page for csvjson 1.0.7

csvlook (1) - manual page for csvlook 1.0.7

csvpy (1) - manual page for csvpy 1.0.7

csvsort (1) - manual page for csvsort 1.0.7

csvsql (1) - manual page for csvsql 1.0.7

csvstack (1) - manual page for csvstack 1.0.7

csvstat (1) - manual page for csvstat 1.0.7

in2csv (1) - manual page for in2csv 1.0.7

mlr (1) - - like awk, sed, cut, join, and sort for name-indexed data such as CSV and t...

pedigree (1) - create a TeX file for pedigree from a csv file

rec2csv (1) - rec to csv converter

sql2csv (1) - manual page for sql2csv 1.0.7

Text::CSV (3pm) - comma-separated values manipulator (using XS or PurePerl)

Text::CSV_PP (3pm) - Text::CSV_XS compatible pure-Perl module

Text::CSV_XS (3pm) - comma-separated values manipulation routines

mlr comes from the miller package, the others ar efrom csvkit. mlr provides subcommands emulating awk,sed,cut,join,sort for .csv files ( and also JSON).

The csvkit commands

There is no general man page for csvkit. There are individualman pages for the utilities listed above… csv2rec, csvvlean, csvcut, csvformat, csvgrep, csvjoin, csvjson, csvlook, csvpy, csvsort, csvsql, csvstack, csvstat, in2csv, pedigree, rec2csv.

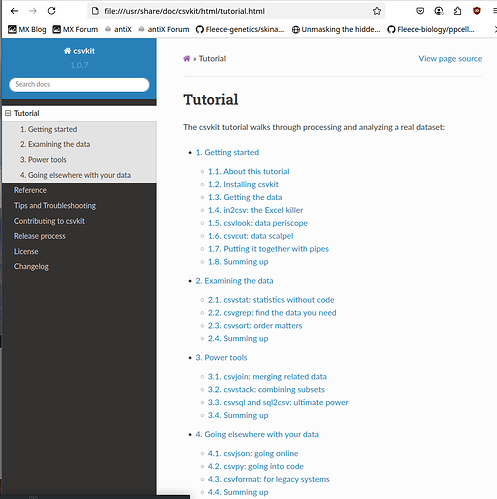

There is also an .html document installed by the package csvkit-doc. You can find it here

file:///usr/share/doc/csvkit/html/index.html

It is a comprehensive document, and includes a tutorial

There is no point in my duplicating it here

The utility I was originally interested in was csvstack which will concatenate .csv files withut duplicating the heading line.

csvstack *.csv > combinedfile

I also noticed csvstat which emulates whatthe R summary() function does

$ csvcut -c Area expt1.csv | csvstat

/usr/lib/python3/dist-packages/agate/table/from_csv.py:74: RuntimeWarning: Error sniffing CSV dialect: Could not determine delimiter

1. "Area"

Type of data: Number

Contains null values: False

Unique values: 21937

Smallest value: 1

Largest value: 199,468.625

Sum: 88,354,619.5

Mean: 694.399

Median: 22.625

StDev: 2,679.849

Most common values: 1 (29493x)

2 (9181x)

3 (2491x)

3.125 (1983x)

4 (1290x)

Row count: 127239

Seems OK, not sure what the Python message means.

Read the document… it is comprehensive.

The ‘mlr’ commands

There is no .html document for `mlr’, but the man page is extensive and has examples.

There is a help facility

$ mlr help topics

Type 'mlr help {topic}' for any of the following:

Essentials:

mlr help topics

mlr help basic-examples

mlr help file-formats

Flags:

mlr help flags

mlr help list-separator-aliases

mlr help list-separator-regex-aliases

mlr help comments-in-data-flags

mlr help compressed-data-flags

mlr help csv/tsv-only-flags

mlr help file-format-flags

mlr help flatten-unflatten-flags

mlr help format-conversion-keystroke-saver-flags

mlr help json-only-flags

mlr help legacy-flags

mlr help miscellaneous-flags

mlr help output-colorization-flags

mlr help pprint-only-flags

mlr help profiling-flags

mlr help separator-flags

Verbs:

mlr help list-verbs

mlr help usage-verbs

mlr help verb

Functions:

mlr help list-functions

mlr help list-function-classes

mlr help list-functions-in-class

mlr help usage-functions

mlr help usage-functions-by-class

mlr help function

Keywords:

mlr help list-keywords

mlr help usage-keywords

mlr help keyword

Other:

mlr help auxents

mlr help mlrrc

mlr help output-colorization

mlr help type-arithmetic-info

Shorthands:

mlr -g = mlr help flags

mlr -l = mlr help list-verbs

mlr -L = mlr help usage-verbs

mlr -f = mlr help list-functions

mlr -F = mlr help usage-functions

mlr -k = mlr help list-keywords

mlr -K = mlr help usage-keywords

Lastly, 'mlr help ...' will search for your exact text '...' using the sources of

'mlr help flag', 'mlr help verb', 'mlr help function', and 'mlr help keyword'.

Use 'mlr help find ...' for approximate (substring) matches, e.g. 'mlr help find map'

for all things with "map" in their names.

The most important thing seems to be ‘verbs’… these are the commands, eg

mlr cut -f {field1,field2}

extracts filed1 and field2 from all records.

‘Cut’ is the verb.

So, read the man page. It has everything

Apps which use .csv files

R and Julia and Python can read and write .csv files.

R and Julia read them into DataFrames.

You can also read them into a spreadsheet

$ gnumeric lab3459_5.jpg.csv

Gnumeric is my favorite spreadsheet.

Some File Managers, when you ask to display a .csv file, will display it using a spreadsheet. I find that annoying. I need to see the raw file.

Conclusion

Anyone who manages any kind of data files could find these utilities useful.