It's like comparing oranges and pears.

ARM is a completely different architecture.

Intel and AMD are competing manufacturers, but both produce CPUs for the same architecture.

So they share the same architecture and (mostly) the same complete CPU instruction set.

ARM, on the other hand, is a different architecture: a low-power RISC CPU, I think aimed at mobile devices. RISC stands for Reduced Instruction Set Computer.

Many companies produce ARM processors: Qualcomm, Broadcom, Nvidia, MediaTek, Samsung, just to name a few.

Because of the reduced instruction set, the internal logic circuits in the CPU can be much simpler, and thus quicker, and the whole CPU can consume less energy while working at a high speed.

On the other hand, a CISC processor can have very complex instructions, like the REP prefixes on Intel (REP/REPE/REPZ/REPNE/REPNZ, the Repeat String Operation Prefix); to do the same, a RISC CPU has to be programmed with a complete loop.

So in such cases CISC wins, especially in supporting higher-level languages like C.
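To make the REP example a bit more concrete, here is a rough Python model of what a single `REP MOVSB` does on Intel. It is only a sketch: the function name `rep_movsb` and the `bytearray` standing in for memory are made up for illustration, and it assumes the direction flag is cleared (copying upwards). A RISC CPU would have to spell out a loop like this as separate instructions.

```python
def rep_movsb(memory: bytearray, src: int, dst: int, count: int) -> None:
    """Toy model of x86 `REP MOVSB` (direction flag cleared).

    On x86 this whole loop is a single prefixed instruction; the
    names and the bytearray "memory" here are illustrative only.
    """
    while count != 0:
        memory[dst] = memory[src]  # MOVSB: copy one byte
        src += 1                   # advance the source index
        dst += 1                   # advance the destination index
        count -= 1                 # REP: decrement the counter and repeat


memory = bytearray(b"hello.....")
rep_movsb(memory, src=0, dst=5, count=5)
print(memory)
```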

Long story short:

ARM can consume much less energy

Intel (and AMD) can be more performant

I don’t have a VPN.

If you mean a static IP, no, it’s not needed. DDNS stands for dynamic DNS, and it is the way to work around the changing dynamic IP of the home network.

When your home router connects to the internet, it gets an IP from your provider, but that IP is not fixed: it may be different on every reconnect, and it may also change from time to time. For example, without reconnecting I have a constant IP for about a week, but then I get another.

The DDNS provider lets you work around this:

it stores your IP and assigns an FQDN, and when you look it up, the DDNS provider’s name server responds with your home’s current IP.

So you can always reach your home using an FQDN, like

sheilahome.freedns.org (just an example…)

Whenever your IP changes, ddclient notices it and does the work of updating the IP at the DDNS provider.
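Roughly, the idea behind such a client can be sketched like this in Python. This is not ddclient's actual code, just a minimal illustration: `sync_once`, `get_public_ip` and `push_update` are hypothetical names, and the IP lookup and the provider update are passed in as plain functions so the example runs without any network access. The addresses are documentation-only example IPs.

```python
from typing import Callable, Optional


def sync_once(
    get_public_ip: Callable[[], str],
    push_update: Callable[[str], None],
    last_ip: Optional[str],
) -> str:
    """Check the current public IP and notify the provider only if it changed.

    All names are hypothetical; a real client would query the router or a
    web service for the IP and call the DDNS provider's update API.
    """
    current_ip = get_public_ip()
    if current_ip != last_ip:
        push_update(current_ip)  # tell the DDNS provider about the new IP
    return current_ip


# Simulate three periodic checks; the IP changes before the third one.
observed = iter(["203.0.113.7", "203.0.113.7", "198.51.100.42"])
sent = []  # stands in for the updates sent to the provider
last = None
for _ in range(3):
    last = sync_once(lambda: next(observed), sent.append, last)
print(sent)
```

Only two updates end up in `sent`: one for the first IP seen, and one when it changes.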

This ddclient instance can run on your home server, or it may be included in your router’s firmware. Having it outside the router gives more flexibility, because it’s possible to configure ddclient for any DDNS provider, while routers tend to have only DynDNS or NoIP as preconfigured providers.

I chose dynu.com because it allows adding aliases, TXT records, and similar goodies, not only an A record.

Great, we use exactly that to keep contacts/calendars synced on Android.

On the computers (desktop/laptop), Thunderbird has had native support since a recent upgrade, and Evolution (my choice) has it too.

From a quick look at EteSync, it appears to be self-hostable:

So if you start to run your own server, it would be a waste not to self-host such things.

Definitely yes:

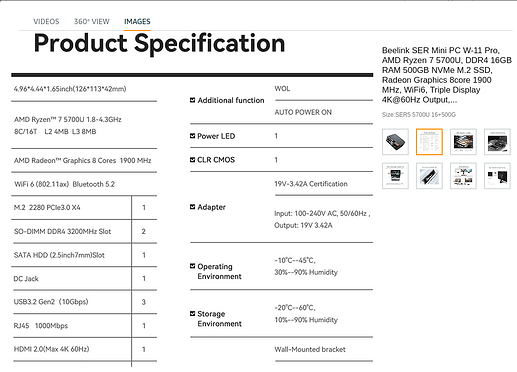

But this is for the Intel architecture.

If you move to an ARM-based computer, first look for an Ubuntu-like image meant for that board.

Like Ubuntu-Minimal for Odroid

https://wiki.odroid.com/getting_started/os_installation_guide#tab__odroid-c4hc4