There is an argument for occasional offsite backups.

I mostly put code stuff important to me on GitHub… it ends up in the Arctic Vault… that should be far enough offsite.

Personal stuff, I don’t have an answer for today… it used to be CDs in a fireproof safe.

LOL…yeah, that is exactly what I mean for now. Since I can now use those M-disks (1000 year data safety medium) with my new external DVD writer, I will just put all current/past media on those as my offsite backups. These will, in fact, be in a fireproof safe.

Sheila

So updating on the setup thus far:

Installed Fedora Server on the Beelink (that took some doing, since you need to tweak a few things). I did not set a root user/password, only a user as admin.

Set up Cockpit in the LM browser to have a GUI for the server from my main machine.

Since the LM machine was the only machine showing up, needed to add the server host. That definitely took some doing. I spent over an hour trying to connect via hostname, ip address, adding firewall rules, etc. and finally just SSH’d into it and that was simple.

Once the SSH connection was in place, Cockpit connected via ssh://ID.

Now I will be opening the unit to add the new 2TB SSD. From there it will take some housekeeping & formatting of one of the external 5TB drives before I am ready to set it up next to my router via ethernet.

Updates will continue after that. And of course I expect I will need answers to various issues as they arise.

Sheila Flanagan

Installed 2 TB internal SSD with single partition for now. As I do not want to run out of room for all the backups from individual machines, I will wait and see how much room is needed for this.

I had previously made a checklist of things to do on the new server when I was considering just using Fedora Workstation vs Server edition. They are:

Deactivate system sounds, mute mic

Turn off bluetooth

Turn off suspend, shutdown for power button

Regarding bluetooth, I ran across the following in the log:

Malformed MSFT vendor event: 0x02

Did some research and this is apparently a known bug with Intel AX-200 and later. I could spend time trying to resolve this, but I did not intend to use any bluetooth or wifi with the server: only ethernet and usb keyboard/mouse when needed. For now, I simply use Cockpit on my LM computer to do everything I need, including terminal commands.

In case this is something I need to resolve, I can include the output of:

lsusb | grep Bluetooth

uname -a

lscpu

But I believe for server usage, this is not necessary?

Thanks,

Sheila

Where I work, we’ve used OpenStack, VMWare ESXi, and Hyper-V. Was not a fan of OpenStack, but it was an old version running on hardware I also didn’t care for. The ESXi was my favorite. Hyper-V seems to work pretty well. The main problem is the hyperconverged method the company used for it and again it was on hardware I didn’t care for. We’re in the process of replacing Hyper-V with ESXi. It will be nice not to have to worry about which platform things are on.

Now the new worry is the licensing and costs for ESXi after the purchase by Broadcom. I’d be in favor of trying something like MicroCloud or LXD on Ubuntu maybe.

Read about LXD… it is like libvirt or virtmanager but with network capability.

I dont need the network capability, but it seems usable.

It is a snap… dont like that.

Should we start a new thread to discuss snap?

@pdecker I, for one, would like to see one. I don’t use them, and as @nevj stated, I don’t like them.

Sheila

New update on home server:

Found it consistently time-consuming (for me) to keep searching for CLI commands to do things on Fedora Server. After spending hours trying to accomplish simple things like adding folders to the internal Backup HDD I installed (permissions, whew!), I gave up and installed Fedora Workstation 39 so I could have a GUI for such tasks. Guess I have been too long in the Ubuntu terminal (there is more to it than dnf vs apt), and too spoiled/lazy from using GUI vs CLI (unlike the DOS days, when we had no choice…lol).

So now, after getting my No-ip set up and moving on to the NAS part, I looked at TrueNAS. The only thing that bothers me is the non-ECC RAM in this hardware. From my research, the AMD with iGPU does not support ECC RAM, and while old threads dismissed the necessity, newer advice seems to be to use it “if you care about your data.” I will have the original data (to date) on DVD media, so even if it were damaged on the server, it would not be lost. New data, I guess, will have to be handled the same way: occasional backups to DVD M-disc, since I am not using a true cloud for backups.

Probably @daniel.m.tripp can provide some insight, especially if he does not use ECC RAM. Do I need to worry about my setup not using this? I did see discussion on some boards providing so-called support via other names? JEDEC spec without ECC and another one, but this is beyond my limited understanding of RAM.

Thanks,

Sheila

Do you really find GUI easier than remembering commands?

In my experience it is simpler to look up how to use a command than to try to follow some account or video about using a GUI. GUI use is hard to document properly. They are meant to be self-documenting, but the design is not always up to it.

and

if it is something really critical, you should use script and commands, so you get a record of what you do. GUI keeps no record.
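One way to get that record, as a minimal sketch: wrap each command so that both the command line and its output are appended to a notes file. The `logged` helper and the log location are made up for illustration; the `script` utility from util-linux is an alternative that records a whole terminal session.

```shell
#!/bin/sh
# Hypothetical helper: keep a running record of commands and their output.
# LOG points at a scratch file here; replace it with your own notes file.
LOG=$(mktemp)

logged() {
    # Record the command line itself, prefixed like a shell prompt.
    printf '$ %s\n' "$*" >> "$LOG"
    # Run the command, show its output, and append the same output to the log.
    "$@" 2>&1 | tee -a "$LOG"
}

logged uname -r
echo "record kept in $LOG"
```

Anything run through `logged` ends up in the file in order, so mistakes and error messages are preserved alongside the fix.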

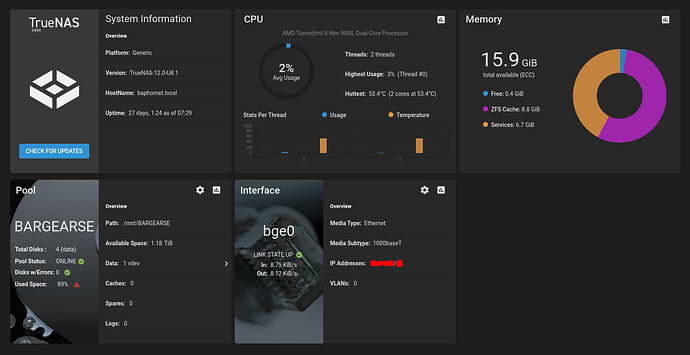

I have a “dedicated” machine for my NAS (TrueNAS / FreeNAS) - circa 2011 HP N40L Microserver, dual core AMD Turion CPU (amd64 / x86_64). Over the last 12 years (purchased December 2011) - it’s mostly run some variant of FreeNAS (I did have a Solaris based NAS running on it for a few months) and pretty much always ZFS.

Up until around 2021 - (i.e. 10 years) I just had 8 GB (2 x 4 GB) non-ECC DDR3 RAM in it and it was just fine.

In 2021 - I decided to upgrade to 16 GB, and ECC wasn’t that much extra - so - now I’m running 16 GB of ECC RAM in it.

TL;DR : I don’t really need it (ECC) - but what the hey! You probably don’t either!

I think I started it off, in 2011 with 4 x 1 TB spinning platters which lasted me about 2 years, then went to 4 x 1.5 TB WD Green spinners (a couple of which came from e-bay - and failed) so upgraded to 4 x 3 TB seagate spinners around 2016, then one of them failed - so I replaced it with a 4 TB WD Blue - then another seagate failed, so I replaced that with another 4 TB WD Blue - so I’m running 2 x 3 TB and 2 x 4 TB (but because of the nature of my RAID layout, the 4 TB drives were only used as 3 TB - and - RAID 5 with 4 members - you’re effectively only using 3 of them).

Late 2019 - I bought two more 4 TB WD Blue and incrementally installed them - i.e. fail one of the 3 TB drives, replace with 4 TB, resilver, rinse repeat - once ALL the shonky seagate drives were replaced with WD drives - I was running 4 x 4 TB with effectively about 11 TB of available space.
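The capacity arithmetic in the last two paragraphs can be sketched roughly as follows, assuming a single-parity layout where every member counts as the smallest disk and one disk's worth of space goes to parity. The `raidz1_usable` function is only an illustration, and a real pool reports somewhat less because of filesystem overhead.

```shell
#!/bin/sh
# raidz1_usable SIZE_TB...: rough usable space, in TB, for a single-parity group.
# Illustrative only: ignores filesystem overhead and reserved space.
raidz1_usable() {
    count=$#
    smallest=$1
    for size in "$@"; do
        if [ "$size" -lt "$smallest" ]; then
            smallest=$size
        fi
    done
    # Every disk is treated as the smallest one; one disk's worth is parity.
    echo $(( smallest * (count - 1) ))
}

raidz1_usable 3 3 4 4   # mixed 3 TB and 4 TB disks: prints 9
raidz1_usable 4 4 4 4   # all four disks at 4 TB: prints 12

# 12 TB (decimal) expressed in TiB (binary), two decimals via scaled integers.
hundredths=$(( 12 * 1000000000000 * 100 / 1099511627776 ))
printf '%d.%02d TiB\n' $(( hundredths / 100 )) $(( hundredths % 100 ))   # prints 10.91 TiB
```

That 10.91 TiB figure is where "about 11 TB" comes from, and the first call shows why the 4 TB drives gave no extra space until every 3 TB drive was gone.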

And that’s the current state - recently deleted a massive bunch of ISO images I don’t need and scored back a massive 0.5 TB (doesn’t sound like much - that’s like 500 movies!) :

x@baphomet ~ df -h /mnt/BARGEARSE

Filesystem Size Used Avail Capacity Mounted on

BARGEARSE 9.9T 8.8T 1.2T 88% /mnt/BARGEARSE

I reckon that’ll last me a couple more years at least.

As mentioned previously - I should probably “invest” in a spare power supply for my N40L… It’s been powered on 24x7 365 since late 2011…

I’m still unsure of your reasoning for running Fedora over some Debian / Ubuntu (or Mint) distro. ANY distro (even desktop) can act as a server.

I hate fiddling too much with NFS (relatively easy) and Samba (relatively a PITA) - and - TrueNAS takes care of the heavy lifting. TrueNAS is a *NIX, and I can get a shell (I use ZSH) for when I want to do stuff with the CLI… I do NOT do any configuration from the shell however - any systemwide config changes will be lost on reboot (I mostly do things like change permissions on files, do housekeeping etc).

It’s now running Plex as a FreeBSD plugin / jail and my daughter uses it from her Apple devices (ipad, iphone macbook) mostly for movies…

I can remember in the past, when I wanted to run a Linux machine as a “home server” - I did NFS trivially - but - installed WebMin to manage Samba as I LOATHE its config files. I’ve also used WebMin to manage bind (DNS server) on my home network - as it - like Samba - has HIDEOUSLY fiddly configuration files and niggly management (I’m a UNIX guy - but - gimme Microsoft Windows server DNS ANY day over fiddling with F–KING BIND databases!).

@nevj I think a lot of it was the whole directory tree layout and it kept saying my 2nd SSD was not mounted. But if I tried to mount it, it wanted to know where. Well, where indeed! I initially had the new drive formatted ext 4 and thought that was good enough. But then it needed a partition? Okay, well I did know to choose GPT, but now what? I just need some folders on that drive for the separate machine backups. But no matter what I tried, it would not make them correctly.

Example:

I want a folder /Acer Pop OS

Typing that, I get /Acer

Huh?

That’s the main thing that drove me nuts, as using Nautilus, Dolphin or whatever GUI, I can just Make New Folder and name it what I want…lol.
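What most likely happened with the folder name: the shell splits an unquoted command line on spaces, so mkdir receives three separate names and /Acer is only the first of them. A small sketch in a scratch directory (the scratch location is just for the demonstration, nothing real is touched):

```shell
#!/bin/sh
# Work in a throwaway directory.
scratch=$(mktemp -d)
cd "$scratch" || exit 1

# Unquoted: three arguments, so three directories named Acer, Pop and OS.
mkdir Acer Pop OS

# Quoted: one argument, so one directory whose name contains spaces.
mkdir "Acer Pop OS"

ls -1
```

Quoting is needed again whenever the name is used later, for example `cd "Acer Pop OS"`.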

I even tried one of @Abishek’s tutorials on these things, but he was referring to the disk the OS was installed on and not an external brand new disk. So that is where I do not know how to get that set up in CLI.

And I do document the GUI in my Linux notebook along with all my commands I learn to implement. Otherwise, as you said, there is no record of what I did. I even output the terminal into those notes so that I can see any mistakes or error messages and how they were resolved.

I am determined to get a better grasp on the terminal commands and thought I had, mostly, in Ubuntu-based systems. Fedora is different in some ways, others only need one word substituted. But what I really need is to understand the setup of the hardware with the software and how to make it all work together. Maybe then I would not have had so much trouble trying to “mount” a new drive.

Thanks,

Sheila

Yay! I just wasn’t sure and was kinda miffed I didn’t know about this type of RAM before I bought my server hardware.

As in a power brick? or are you talking about UPS? Geez…another piece of hardware I did not consider. I know it’s not a biggie now, but it will be eventually.

I think I am bored…LOL. Having run all Ubuntu-based DEs (Kubuntu, Pop OS, LM) I was having issues with that piece of crap Surface Pro 7 that I had Pop on, so I installed Fedora. And while the desktop (Gnome) was more like Pop (Gnome), it used a different package system, and I thought, “Yes, I want to learn something different.” (I told you I was bored).

But let me say that I have not gotten so far in this thing that I can’t be persuaded to switch distros. My whole purpose in initially installing Fedora server was to “make” myself use the terminal more in order to become comfortable with the various commands needed to run a system. And I thought, “It’s not like I’m installing a bunch of apps and things I need a GUI for…” and it did have the browser interface via Cockpit, which was very nice. But still I had to go research parts of it that I later found did not apply to me (i.e., Join Domain). And that whole “mount” thing with the 2nd drive, that had me pulling my hair out and noticing I had spent 2 hours on that issue alone. So I talked myself into the compromise that I would still use Fedora for learning (I certainly won’t get much mileage on that SP7 install), but with a DE so I could get stuff done faster in setting up this home server.

For one, reading lots of Linux articles, it seems Wayland is on the way in to stay for most of Linux. And while I had to switch to “input-leap” for my barrier client-server setup, I chalked it up to: I learned something (albeit via GUI) and was able to make it work. So FINALLY, I can share my mouse/keyboard from LM to Pop to Fedora.

As I told @nevj , I probably need more understanding of the structure of the hardware-software things in CLI so that it makes logical sense (in my brain) on how/why you use a certain command string to get things done.

I thought Samba was supposed to be a simple file sharing drive on the network. But I only used it once, when I had a W11 install. I have downloaded the TrueNAS Core, but will not be installing/setting up till I understand more about what it does for me (like a GUI) and what exactly the “heavy lifting” is you are referring to.

Thanks,

Sheila

True if you have to manually edit zone files. Surely there is a better way these days?

Sorry - should have been more clear - I meant an ATX style power supply in case the one in the chassis fails - and it’s not a “generic” ATX P/S - it’s specific to this hardware… The server itself uses a stock PC / kettle cord power to the mains… At a pinch I guess I could hang a standard “off the shelf” ATX power supply out the back of the case - but it would look a bit ugly and clunky…

I still have one customer - that - yes - you have to edit zone files and “make” when you’re done - it’s a vast ugly leviathan piece of crap (partially on Solaris) and there’s only ONE person in this state who knows how it all hangs together! It’s gotta be one of the UGLIEST things I’ve seen in 10 years or more!

On Windows systems - yes. But running a dedicated Samba server on Linux can be tricky to get perfectly right. Me? I don’t really even need Samba on my TrueNAS system - as all my systems are Linux or MacOs and I can use NFS - but I keep Samba there - just in case - e.g. my daughter uses the Samba shares from her Mac, and I use them from my iPads (never found an NFS client for iPad).

It’s FreeBSD “UNIX” at the core - it doesn’t come with a GUI - it only ever boots to the console / CLI - but - what TrueNAS gives you is a FANTASTIC WebUI to configure just about everything - you just need to setup networking on the console (and it has some automated process that asks you - or prompts you at each step) - once you’ve got it on your LAN - you fire up the web front end : that screenshot is the “front page” after you’ve logged into the TrueNAS web UI.

Oh yeah - and I think the installer prompts you to create a root password (you can create other accounts later in the web UI). Probably naughty / no-no - but - I log in as root to the web UI of my TrueNAS to manage it (I don’t login as root when I SSH to it however).

Note : TrueNAS needs AT LEAST two disks to install - the 1st disk is the boot disk, and the 2nd disk is the data / server / storage. In my case - my HP N40L has a single 2.5" 256 GB SSD to boot off, and 4 x 4 TB HDDs setup as RAID5 for storage / sharing (in ZFS, “RAID5” is called RAIDZ1 - you need a minimum of 3 disks to setup a RAIDZ1 pool). TrueNAS actually recommends two disks to boot off as well - with two disks - it would setup the boot drives as a mirror - but I only have 5 SATA ports on this system (the N40L has a SAS backplane with 4 SATA ports off that backplane + a single SATA port for an optional optical drive - I use that for my boot SSD).

Here’s what the N40L looks like with the front door open :

Note : those disk caddies might look “hot swap” but they’re not! System needs to be powered down to remove or insert hard drives.

I just remembered a piece of hardware I had years ago before I got this HP Microserver : a PCI dual port SATA card (it’s ostensibly for RAID - but hardware RAID IS BAD BAD BAD!) - I could slot that into my N40L and run two SATA SSDs on it mirrored (I could also maybe even partition them - so half is the boot RAID, and the other half is for ZFS cache - but that’s getting highly technical - sorry).

This just prompted me to have good look at my main storage filesystem on my NAS - I used to use it as the NAS backend for my wonky little VMware “Lab” - and there was 200+ GB of VMDK images on there - now gone :

✘ x@baphomet /mnt/BARGEARSE df -h /mnt/BARGEARSE

Filesystem Size Used Avail Capacity Mounted on

BARGEARSE 9.9T 8.6T 1.4T 86% /mnt/BARGEARSE

I reckon I can last on this for a couple more years easy - and will only upgrade to 8 TB (or higher) WD disks when any of these 4x4TB fail, and will replace them incrementally (note I won’t reap any benefit from a larger disk till EVERY disk in the ZFS pool is that size).

You have to make a mount point (on your internal disk) to mount it to.

A mount point is just a directory with nothing in it.

So you use mkdir to make mount point.

Traditionally people put mount points in /media or /mnt,

but you can put them anywhere in your filesystem.

OK lets do it

mkdir /mnt/nevj

now lets say we have an external disk sdb with a partition on it called sdb1 and lets say it has been given an ext4 filesystem. To mount it I do

mount -t ext4 /dev/sdb1 /mnt/nevj

Then when you look with df you should see /mnt/nevj listed.

You can , of course, mount it with the file manager, instead of with the mount statement. In that case the file manager will

automatically make a mount point… it will be in /media.

File manager mounts are user mounts. Mount statement mounts are superuser mounts.

Is that the sort of thing you are asking for?

Excuse me if I misread what you want.
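The steps above can be sketched as a dry run that only prints the commands, so nothing is mounted by accident. The `plan_mount` helper, /dev/sdb1 and /mnt/backup are placeholders for illustration; check the real device name with lsblk first.

```shell
#!/bin/sh
# Dry-run sketch: prints the commands instead of running them.
plan_mount() {
    device=$1       # partition holding the filesystem, e.g. /dev/sdb1
    mountpoint=$2   # empty directory the disk will be attached to
    fstype=$3       # filesystem type, e.g. ext4

    # Making directories under /mnt and mounting both need superuser rights.
    echo "sudo mkdir -p $mountpoint"
    echo "sudo mount -t $fstype $device $mountpoint"
    # Afterwards df should list the new mount point.
    echo "df -h $mountpoint"
}

plan_mount /dev/sdb1 /mnt/backup ext4
```

Once the printed commands look right for your disk, they can be run one at a time.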

Thanks, yes. But I did try /media, as I knew that’s where all my other external disks get mounted. But mkdir /media returned an error, so I figured there must be something about a new secondary disk that was different.

Further research then indicated I had to first create a partition (via fdisk). That I did not understand. On a primary system disk, of course we need the boot, efi, etc. on separate partitions. But this was just to be a data disk. I never partition a new USB drive, just format it, thus I thought it would be the same with a secondary internal SSD.

Then attempting that–there was partition type, “primary”, what other type is there? And while I could have just accepted defaults maybe from there, not having a clear understanding of disk structures made me afraid I might mess it up. And, of course, to auto mount I would need to edit fstab.

So after all of that and trying to create the folders with errors returned, I guess I just gave up and said I guess I will need to install a DE to speed this process up, since I know how to do it with a file manager.

So you mean doing the mount in CLI is a “statement” and “superuser” with specific rights? I looked at that and saw flags to use, like rw and auto, but did I still need to edit fstab even with auto?
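On the fstab question: a mount done by hand only lasts until the next reboot, and auto is an /etc/fstab option (already part of defaults), so the disk still needs a line in that file to come back by itself. A minimal sketch of what such a line looks like, where the `fstab_line` helper, the UUID and the mount point are all placeholders (the real UUID comes from `lsblk -f` or `blkid`):

```shell
#!/bin/sh
# Hypothetical helper: print an /etc/fstab entry for a data disk.
fstab_line() {
    uuid=$1         # filesystem UUID, as reported by lsblk -f
    mountpoint=$2   # existing empty directory
    fstype=$3       # e.g. ext4
    # defaults already includes rw and auto; nofail lets the machine boot
    # even if the disk is missing; the final 2 means fsck after the root disk.
    printf 'UUID=%s  %s  %s  defaults,nofail  0  2\n' "$uuid" "$mountpoint" "$fstype"
}

fstab_line 0000-PLACEHOLDER /mnt/backup ext4
```

After adding the line to /etc/fstab, `sudo mount -a` mounts everything listed there, which is a way to test the entry without rebooting.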

After reading so many tutorials on all of that, I realized I do not understand all the structures enough to be able to point to a solution that applied to my situation and determine the correct one to use. I hope to correct that soon.

Sheila

It was probably already present.

You should use a subdir inside /media or /mnt, and give it some meaningful name.

Oh, I see. USB drives come preformatted… ie with partition(s) and a filesystem. Internal SSD’s are blank. They need a partition table, 1 or more partitions, and filesystems on each partition.

Fdisk is too much like hard work. Use gparted… you wont make mistakes with it.

If you use an msdos partition table, you get primary partitions and extended partitions

If you use a GPT partition table , all partitions are the same type… ie just partitions.

You should use GPT. Msdos partitioning has limits… I think it can’t handle disks larger than 2 TB.

So if you used msdos , go back and do it again with gpt.
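The 2 TB figure follows from the msdos (MBR) table storing sector addresses as 32-bit numbers. A quick check of the arithmetic, assuming the usual 512-byte sectors and a "2 TB" drive as sold in decimal units:

```shell
#!/bin/sh
sectors=4294967296          # 2^32 addressable sectors
sector_bytes=512            # assumed sector size
limit_bytes=$(( sectors * sector_bytes ))
disk_bytes=2000000000000    # a "2 TB" drive in decimal bytes

echo "msdos limit: $limit_bytes bytes"                        # 2199023255552
echo "msdos limit: $(( limit_bytes / 1099511627776 )) TiB"   # 2

if [ "$disk_bytes" -le "$limit_bytes" ]; then
    echo "a 2 TB disk just fits under the limit"
else
    echo "this disk needs GPT"
fi
```

So a 2 TB SSD would squeeze in under msdos, but anything larger would not, which is one more reason to go with GPT from the start.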

Keep reading. You will conquer this. A DE will not help… you do need to understand

the interaction with hardware.

Okay, again my lack of understanding in disk structures shows here, but I have the internal 500 gb SSD and the secondary internal 2 TB SSD. Are you saying the program resides on the boot drive and then points to the second drive for storing the data? I mean, obviously, the boot disk has to have an OS. Or by saying

You mean TrueNAS is an OS? for the purpose of using it like a h/w NAS? If so, how would I use it on the same drive as the Linux OS boot drive? I would have to make one of the external HDDs a boot drive & the other one the data drive? If so, no wonder homeservers have so many drives.

Why? So that one can “mirror” the other? That is a safety net?

I don’t intend to setup RAID as I will only be using additional external HDDs for backing up the server & the internal data drive. Is that correct thinking?

Of course all of my media (movies/music) will also be on M-discs (in a fireproof safe) so that replaces my “offsite” copy so that I do not need a cloud. Reading up on hosting NextCloud, etc. seems “highly technical” to me.

Oh. I did not know you could do that. Thought internal power supply was “it.” So this would be a redundant PSU? Yours has been up so long and hasn’t failed yet, so may be a while before I need to worry about that? And as I understand, a failed PSU would not harm my data, just could not boot the machine until I replaced it; albeit, I don’t think I can do that in this Beelink SER5 Pro, or at least I did not see it when I opened it up, but I did not remove everything down to the motherboard in this stacked setup. If I ended up needing to upgrade the 16 gb RAM (or had it supported ECC), I would have.

That is what I should have said. Like Cockpit on Fedora Server, it is a browser GUI…lol. Although my lack of understanding makes me wonder why after removing Fedora server and replacing it with Fedora workstation I still have the same access in a browser. So Cockpit is not just for server OS?

Thanks for all the info, your input will clear up any gaps in my limited knowledge of these things so I can be sure how to proceed before I attempt a setup that will not work for me.

Sheila