See my topic

I have a directory structure for the images, as follows:

```
dermis.collagen.images
├── Glensloy
│   ├── smooth
│   └── wrinkled
│       ├── between
│       └── on
└── Manton
    ├── smooth
    └── wrinkled
        ├── between
        └── on
```

There are two farms, Glensloy and Manton. Each farm has a group of smooth-skin sheep and a group of wrinkled-skin sheep, and within the wrinkled group, samples were taken either on a wrinkle or between wrinkles.

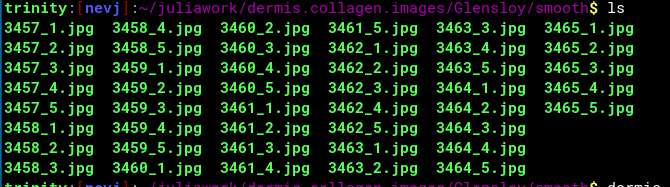

In each lower level directory there is a set of image files like this

The numbers (like 3457) identify individual sheep, and there are 5 microscope fields per sheep, denoted by the suffixes _1 to _5.
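As a small illustration of this naming scheme, the sheep number and field number can be pulled out of a file name with POSIX parameter expansion. This is only a sketch: the function name `sheep_and_field` is hypothetical, and it assumes every file follows the `<sheep>_<field>.jpg` pattern described above.

```sh
#!/bin/sh
# Hypothetical helper: split a file name such as 3457_1.jpg into the
# sheep number (3457) and the microscope field number (1).
# ASSUMPTION: names follow the <sheep>_<field>.jpg pattern.
sheep_and_field() {
    name=$(basename "$1")   # drop any directory part
    stem=${name%%.*}        # 3457_1.jpg -> 3457_1 (also strips .jpg.csv)
    sheep=${stem%_*}        # 3457_1 -> 3457
    field=${stem##*_}       # 3457_1 -> 1
    printf '%s %s\n' "$sheep" "$field"
}

sheep_and_field dermis.collagen.images/Glensloy/smooth/3457_1.jpg   # prints: 3457 1
```

The same expansions also work on the result files (e.g. `3457_1.jpg.csv`), because everything after the first `.` is stripped.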

I then wrote a small shell script:

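```sh
#!/bin/sh
# Run collagen1img.jl once per image in one subdirectory.
# The *.jpg glob skips the .csv result files; "$i" is quoted for safety.
for i in dermis.collagen.images/Glensloy/smooth/*.jpg
do
    julia ./collagen1img.jl "$i"
done
```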

This processes all the images in one directory. The program collagen1img.jl (see my previous reply for the listing) writes its output for each image to a .csv file such as 3457_1.jpg.csv.

It takes quite a while: about 45 minutes to process the 45 images in one subdirectory, i.e. roughly a minute per image.
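With runs this long, it helps if an interrupted run can be restarted without redoing images that are already finished. A minimal sketch, assuming (this is not confirmed in the post) that `collagen1img.jl` writes `<image>.csv` next to each image; `process_dir` is a hypothetical name, and its optional second argument lets you substitute a different command, e.g. for a dry run:

```sh
#!/bin/sh
# Sketch: process every .jpg in one directory, skipping images that
# already have a result, so a stopped run can simply be restarted.
# ASSUMPTION: the result for X.jpg is written as X.jpg.csv beside it.
process_dir() {
    dir=$1
    cmd=${2:-"julia ./collagen1img.jl"}   # command run on each image
    for img in "$dir"/*.jpg; do
        [ -e "$img" ] || continue          # no .jpg files: glob left unexpanded
        if [ -e "$img.csv" ]; then
            echo "skip $img (already done)"
            continue
        fi
        # $cmd is unquoted on purpose so "julia ./collagen1img.jl"
        # splits into the command and its script argument.
        $cmd "$img"
    done
}
```

For example, `process_dir dermis.collagen.images/Glensloy/smooth` would pick up where an earlier run over that directory stopped.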

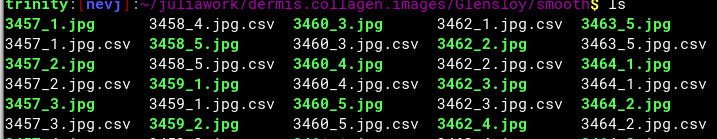

I end up with this

there is more…

That gives one .csv file for every combination of sheep and microscope field.
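A quick way to confirm nothing went missing is to count the result files per sheep and flag any sheep that does not have all 5 fields. This is a sketch that again assumes the `.csv` files sit in the image directory and are named `<sheep>_<field>.jpg.csv`; `check_fields` is a hypothetical helper, and it prints nothing when every sheep is complete:

```sh
#!/bin/sh
# Sketch: in one leaf directory, report any sheep whose number of
# result files differs from the expected count (default 5 fields).
# ASSUMPTION: results are named <sheep>_<field>.jpg.csv in that directory.
check_fields() {
    for f in "$1"/*_*.jpg.csv; do
        [ -e "$f" ] || continue
        name=${f##*/}
        echo "${name%_*}"            # 3457_1.jpg.csv -> 3457
    done | sort | uniq -c |
        awk -v want="${2:-5}" '$1 != want { print "sheep " $2 ": " $1 " of " want " fields" }'
}
```

For example, `check_fields dermis.collagen.images/Glensloy/smooth`.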

Now I have to repeat that for each of the six image subdirectories. Experience has taught me to do things in manageable lumps.
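The six lumps are the leaf directories: each farm combined with smooth, wrinkled/between and wrinkled/on. One way to keep the lumps separate but still scripted is sketched below; `leaf_dirs`, `run_lump` and the `CMD` override are hypothetical names, not part of the original script:

```sh
#!/bin/sh
# Sketch: name the six leaf directories once, then process them one
# lump at a time with the same per-image loop as the original script.
ROOT=dermis.collagen.images
CMD=${CMD:-"julia ./collagen1img.jl"}   # overridable, e.g. for a dry run

# Print the six leaf directories (2 farms x 3 sample types).
leaf_dirs() {
    for farm in Glensloy Manton; do
        for sub in smooth wrinkled/between wrinkled/on; do
            echo "$ROOT/$farm/$sub"
        done
    done
}

# Process every .jpg in one leaf directory.
run_lump() {
    for img in "$1"/*.jpg; do
        [ -e "$img" ] || continue
        $CMD "$img"                     # unquoted so CMD can hold arguments
    done
}
```

`run_lump dermis.collagen.images/Manton/smooth` does a single lump, and `for d in $(leaf_dirs); do run_lump "$d"; done` does all six in turn (safe here only because the directory names contain no spaces).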

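I probably could have written the loop over images in Julia instead of using a shell script. That may have been more efficient, since each `julia` call in the script starts a fresh Julia process and pays the startup and compilation cost again.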

Next step: combine all of these .csv files into a dataframe, with appropriate codings for farm, smooth vs. wrinkled, on vs. between wrinkles, sheep number and microscope field number.
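The combining could be done directly in Julia or R, but as a shell-level sketch: stack every per-image `.csv` into one long CSV, prefixing each row with farm, skin, site, sheep and field columns taken from the file path. The column names, the `NA` code for smooth-skin samples (which have no on/between site), and the helper name `combine_csvs` are my own choices. It also assumes that every `.csv` has exactly one header line, that all files share the same columns, that no field contains a quoted comma, and that the results sit next to the images.

```sh
#!/bin/sh
# Sketch: stack all <sheep>_<field>.jpg.csv files under a root directory
# into one CSV on stdout, with the design codes as the first 5 columns.
# ASSUMPTIONS: one header line per file, identical columns in every file,
# no quoted commas, files stored beside the images.
combine_csvs() {
    root=$1
    first=1
    find "$root" -name '*.jpg.csv' | sort | while read -r f; do
        rel=${f#"$root"/}                 # Glensloy/wrinkled/on/3457_1.jpg.csv
        farm=${rel%%/*}                   # Glensloy
        rest=${rel#*/}                    # wrinkled/on/3457_1.jpg.csv
        skin=${rest%%/*}                  # smooth or wrinkled
        case $rest in
            wrinkled/on/*)      site=on ;;
            wrinkled/between/*) site=between ;;
            *)                  site=NA ;;
        esac
        name=${f##*/}; stem=${name%%.*}   # 3457_1
        sheep=${stem%_*}; field=${stem##*_}
        if [ "$first" = 1 ]; then
            # header row, taken from the first file only
            awk 'NR == 1 { print "farm,skin,site,sheep,field," $0; exit }' "$f"
            first=0
        fi
        # data rows (everything after the header), with the codes in front
        awk -v pre="$farm,$skin,$site,$sheep,$field" 'NR > 1 { print pre "," $0 }' "$f"
    done
}
```

Running `combine_csvs dermis.collagen.images > all_fields.csv` would then give a single file that R's `read.csv` or Julia's CSV.jl could load as one data frame.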

Then it would be ready for analysis.

I will try to do the dataframe and analysis work in Julia, but I may have to fall back to R.

The best work plan for this sort of task is to break it down into small steps: ‘divide and conquer’ works for programming and for data processing.