@nevj :

Hi again,

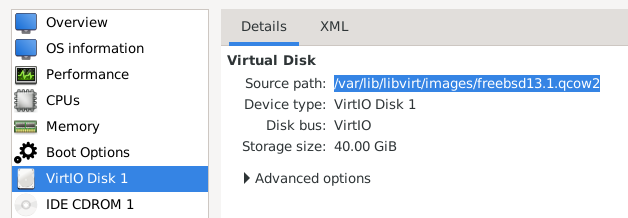

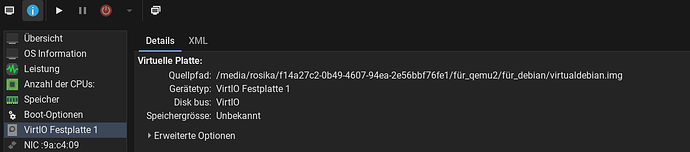

I just tried to enlarge Debian's qcow2 image by 20G so that I could give the inline upgrade another try.

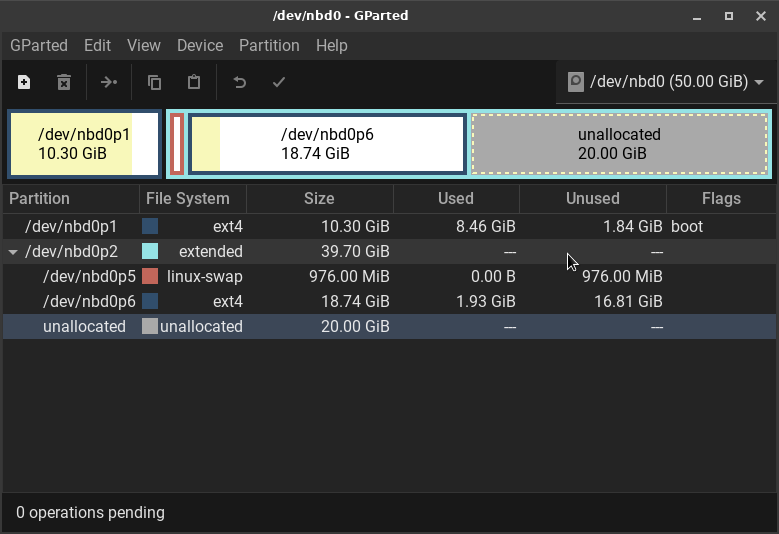

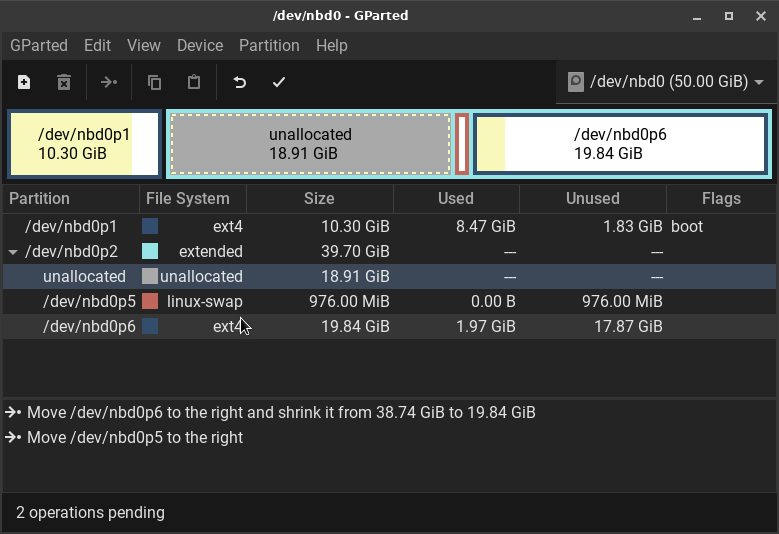

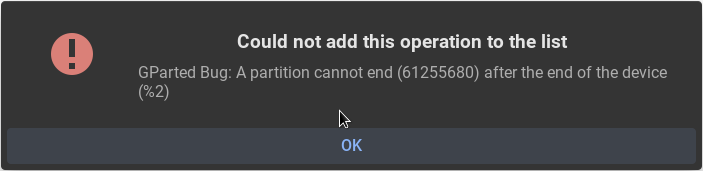

It seems to have failed though.

Here's what I did:

qemu-img resize virtualdebian.img +20G

sudo modprobe nbd max_part=8

sudo qemu-nbd -c /dev/nbd0 virtualdebian.img

gparted /dev/nbd0

- then I performed the resize action from within gparted (see log below)

sudo qemu-nbd -d /dev/nbd0

sudo rmmod nbd
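For reference, the sequence above can be written down as a small dry-run helper. This is only a sketch: the function name `print_resize_steps` is made up for illustration, and it merely prints the commands I used (with the image name and size as parameters) instead of running them.

```shell
# Print (do not run) the resize steps for a given image and size increment.
# print_resize_steps is a hypothetical helper; nothing here touches a real image.
print_resize_steps() {
    image="$1"   # e.g. virtualdebian.img
    grow="$2"    # e.g. 20G
    printf '%s\n' \
        "qemu-img resize $image +$grow" \
        "sudo modprobe nbd max_part=8" \
        "sudo qemu-nbd -c /dev/nbd0 $image" \
        "gparted /dev/nbd0" \
        "sudo qemu-nbd -d /dev/nbd0" \
        "sudo rmmod nbd"
}

print_resize_steps virtualdebian.img 20G
```

The gparted step stays interactive, so the helper only documents the order of the commands.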

So far so good, I thought. But I was still sceptical, as gparted still showed the 20GB as unallocated space.

But here's the log of what gparted did:

GParted 1.3.1

configuration --enable-libparted-dmraid --enable-online-resize

libparted 3.4

========================================

Device: /dev/nbd0

Model: Unknown

Serial:

Sector size: 512

Total sectors: 104857600

Heads: 255

Sectors/track: 2

Cylinders: 205603

Partition table: msdos

Partition Type Start End Flags Partition Name File System Label Mount Point

/dev/nbd0p1 Primary 2048 21606399 boot ext4

/dev/nbd0p2 Extended 21608446 62912511 extended

/dev/nbd0p5 Logical 21608448 23607295 linux-swap

/dev/nbd0p6 Logical 23609344 62912511 ext4

========================================

Grow /dev/nbd0p2 from 19.70 GiB to 39.70 GiB 00:00:01 ( SUCCESS )

calibrate /dev/nbd0p2 00:00:00 ( SUCCESS )

path: /dev/nbd0p2 (partition)

start: 21608446

end: 62912511

size: 41304066 (19.70 GiB)

grow partition from 19.70 GiB to 39.70 GiB 00:00:01 ( SUCCESS )

old start: 21608446

old end: 62912511

old size: 41304066 (19.70 GiB)

new start: 21608446

new end: 104857599

new size: 83249154 (39.70 GiB)

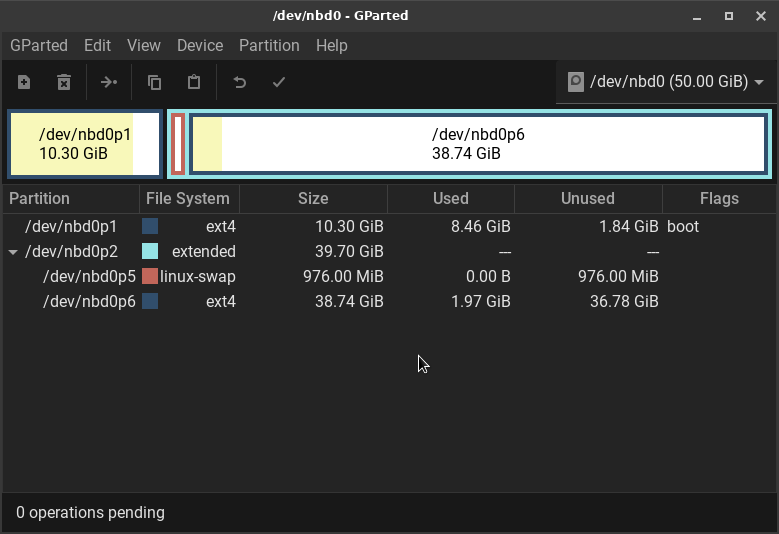

According to the log, the action was successful.
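The sizes gparted reports can be double-checked from the sector counts. A minimal sketch, assuming the 512-byte sector size shown above; `sectors_to_gib` is just a helper name made up here:

```shell
# Convert a count of 512-byte sectors to GiB with two decimals.
# LC_ALL=C keeps the decimal separator a dot regardless of the system locale.
sectors_to_gib() {
    LC_ALL=C awk -v s="$1" 'BEGIN { printf "%.2f\n", s * 512 / (1024 * 1024 * 1024) }'
}

sectors_to_gib 41304066   # old size of /dev/nbd0p2, prints 19.70
sectors_to_gib 83249154   # new size of /dev/nbd0p2, prints 39.70
```

Both values match the 19.70 GiB and 39.70 GiB in the gparted output, so the numbers in the log are at least consistent with each other.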

I don't quite get it. The disk size was more than 40GB before. So it should be over 60GB now.

Here's what the Debian VM said after having started it:

rosika2@debian ~> df -h

Dateisystem Größe Benutzt Verf. Verw% Eingehängt auf

udev 475M 0 475M 0% /dev

tmpfs 99M 4,8M 95M 5% /run

/dev/vda1 11G 7,7G 1,9G 81% /

tmpfs 494M 144K 494M 1% /dev/shm

tmpfs 5,0M 4,0K 5,0M 1% /run/lock

tmpfs 494M 0 494M 0% /sys/fs/cgroup

/dev/loop0 114M 114M 0 100% /snap/core/13308

/dev/loop1 62M 62M 0 100% /snap/core20/1518

/dev/loop3 102M 102M 0 100% /snap/lxd/23155

/dev/loop2 387M 387M 0 100% /snap/anbox/213

/dev/vda6 19G 973M 17G 6% /home

mount-tag 35G 26G 7,3G 78% /mnt/mymount

tmpfs 99M 4,0K 99M 1% /run/user/114

tmpfs 99M 0 99M 0% /run/user/1000

/dev/vda1 is 11GB and /dev/vda6 is 19GB in size…

I don't know what might have gone wrong despite the “Success” messages provided by gparted.

Cheers from Rosika

P.S.:

However, this is what sfdisk says:

rosika2@debian ~> sudo sfdisk -l

Disk /dev/vda: 50 GiB, 53687091200 bytes, 104857600 sectors

Units: sectors of 1 * 512 = 512 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 512 bytes / 512 bytes

Disklabel type: dos

Disk identifier: 0x4f7a63d0

Device Boot Start End Sectors Size Id Type

/dev/vda1 * 2048 21606399 21604352 10,3G 83 Linux

/dev/vda2 21608446 104857599 83249154 39,7G 5 Extended

/dev/vda5 21608448 23607295 1998848 976M 82 Linux swap / Solaris

/dev/vda6 23609344 62912511 39303168 18,8G 83 Linux

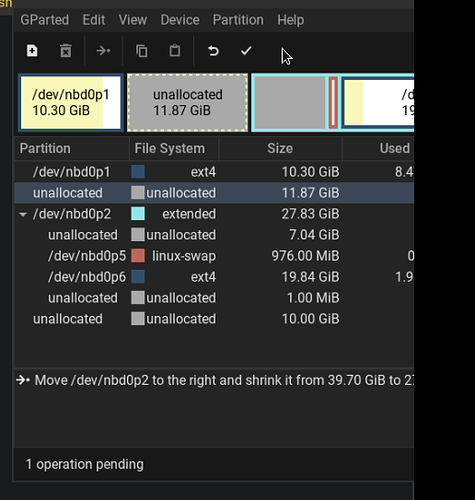

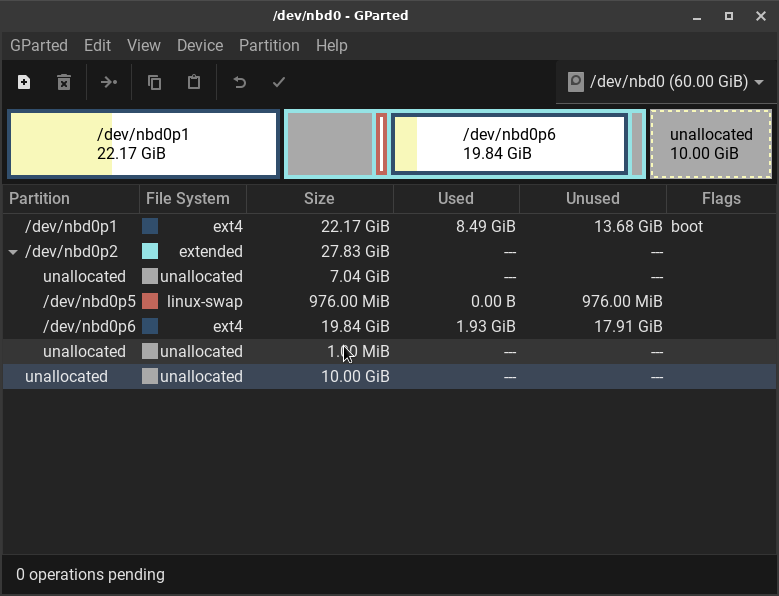

So /dev/vda2 is the one that has grown by 20GB.

But df -h doesn't list it.
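Going by the sfdisk figures, the added space seems to sit between the end of /dev/vda6 and the end of /dev/vda2. A rough sketch of that arithmetic, assuming 512-byte sectors as reported by sfdisk; the helper name `sectors_to_gib` and the variable names are made up for illustration:

```shell
# Convert a count of 512-byte sectors to GiB with two decimals.
# LC_ALL=C keeps the decimal separator a dot regardless of the system locale.
sectors_to_gib() {
    LC_ALL=C awk -v s="$1" 'BEGIN { printf "%.2f\n", s * 512 / (1024 * 1024 * 1024) }'
}

vda2_end=104857599   # last sector of the extended partition (from sfdisk)
vda6_end=62912511    # last sector of /dev/vda6 (from sfdisk)

# Sectors inside the extended partition that lie after /dev/vda6.
free_sectors=$((vda2_end - vda6_end))
echo "$free_sectors sectors"       # prints "41945088 sectors"
sectors_to_gib "$free_sectors"     # prints 20.00
```

Since df -h only reports mounted filesystems, and /dev/vda2 is just the extended container without a filesystem of its own, this might be why the extra 20GB shows up in sfdisk but not in df.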

P.S.2:

Here's what gparted says now: