Foist off, a little intro. I played with DOS batch files back in the early 80s so I’m not only out of practice but I’m switching script language from batch to bash. Yeah, this is my first foray into the world of bash after using Linux for just shy of 15 years.

I’m downloading, merging and organizing 11 hosts files from online sources into one. I’m sudo cat’ing these three files to write over the /etc/hosts: I have a copy of my original hosts header with several of my own additions for blocking, then I make a current date and time file prepended to that so I know when I’ve updated the hosts file. Then 11 source files from online are cat’ed and cleaned up. That assemblage is then my new hosts file. I’ve added a test section to determine if a copy of the original hosts file exists and if it doesn’t, one is made. Can’t be too careful! Just call me Sir Blockalot.
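The "make a copy of the original hosts file only if one doesn't exist yet" test could look roughly like this. It is a minimal sketch, not the actual script: the function name backup_once and the .orig suffix are made up for illustration, and the demo works on a throwaway temp file rather than /etc/hosts.

```bash
#!/bin/sh
# Copy a file to <file>.orig only if that backup does not exist yet.
# backup_once and the ".orig" suffix are hypothetical names for illustration.
backup_once() {
    src=$1
    if [ ! -e "$src.orig" ]; then
        cp -p "$src" "$src.orig"
    fi
}

# Demo on a temp file (for the real thing src would be /etc/hosts, run with sudo).
demo_dir=$(mktemp -d)
printf '127.0.0.1 localhost\n' > "$demo_dir/hosts"
backup_once "$demo_dir/hosts"                  # first run: backup is created
printf '0.0.0.0 example.com\n' >> "$demo_dir/hosts"
backup_once "$demo_dir/hosts"                  # second run: backup is left alone
cat "$demo_dir/hosts.orig"                     # still only the original line
```

Because the check runs before every copy, the script can be run as often as you like without the backup ever being overwritten by an already-modified hosts file.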

I’ve run into a problem I just can’t figure out. Using ‘sort --unique’ I end up with a tiny handful of duplicate lines, always pairs, no more than two of each duplicate. Naturally when all those files are downloaded there are many-several duplicates and many of them are more than just double copies. Not only is this the first time I’ve tackled bash, but it’s also a large mix of files from the GSW - Great Spider Web.

I’m assuming, since they’re downloaded, that the problem is caused by either MS or HTML formatting? If I’m right, how can I figure it out and then fix it? It’s either that or the size of the finished file, 565,000 lines give or take. I don’t think it’s the size, but I’ll toss that out as a possibility.

Here’s what one pair looks like.

0.0.0.0 example.com #[Spamdexing]

0.0.0.0 example.com #[Spamdexing]

I update the hosts files on my old laptop, my wife’s laptop and her 2in1 monthly and I decided to automate the process and just for spits and gurgles I decided to add all those other online hosts files. I’ve made the script file mostly generic such as using “${USER}” in the file paths so I can toss it onto all of our PCs without any editing of the script.

BTW, did you ever notice that there are two large industries where the customer is referred to as a user?

Drugs and software.

For all the more duplicates there are, it’s not mission critical. I could easily just ignore them, but it bugs me ya know. I’m a prefectionist - a poorfuctionist - I’d just like it tidy.

use “uniq” program on its own…

e.g.

cat file1 file2 file3 |sort -n |uniq > file4

I’ve never found a NIX that didn’t have uniq - it’s in BSD, SunOS, SCO and Linux (and probably IRIX and AIX too)…

Hmm. Just getting into bash and learning the commands on the fly. Nice to know that uniq is a stand alone command. Gonna kick it tomorrow and see how it goes.

Thanks

I had a very-very similar problem.

I use DNSMasq, and need to create a badhostlist, but I download the list from multiple sites, so the duplicates are not a surprise.

The duplicates after sort -u were caused by a ^M character (a carriage return, the first half of a Windows CRLF line ending) at the end of some lines.

tr -d '\015' removes it just fine, and then sort -u no longer keeps the duplicates.
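To see the effect in isolation, here is a small sketch with a made-up two-line sample file. LC_ALL=C is my addition, so that sort compares plain bytes and the demo behaves the same on every machine.

```bash
#!/bin/sh
# Two lines that look identical, but the second ends in CR+LF (Windows style).
sample=$(mktemp)
printf '0.0.0.0 example.com\n0.0.0.0 example.com\r\n' > "$sample"

# The stray CR makes the lines differ byte-wise, so both survive sort -u.
before=$(LC_ALL=C sort -u "$sample" | wc -l | tr -d ' ')

# Delete every CR (octal 015) first and the pair collapses to one line.
after=$(tr -d '\015' < "$sample" | LC_ALL=C sort -u | wc -l | tr -d ' ')

echo "without tr: $before line(s), with tr: $after line(s)"
rm -f "$sample"
```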

My whole script is like that:

rm *.txt

wget -nc https://www.adawayapk.net/downloads/hostfiles/official/3_yoyohost.txt

wget -nc https://www.adawayapk.net/downloads/hostfiles/official/2_ad_servers.txt

wget -nc https://www.adawayapk.net/downloads/hostfiles/official/1_hosts.txt

wget -nc https://getadhell.com/standard-package.txt

wget -nc https://raw.githubusercontent.com/notracking/hosts-blocklists/master/hostnames.txt

wget -nc https://raw.githubusercontent.com/StevenBlack/hosts/master/hosts

mv hosts stevenhosts.txt

sed '/^#/d' 1_hosts.txt > collected_hosts.txt

sed '/^#/d' 2_ad_servers.txt >> collected_hosts.txt

sed '/^#/d' 3_yoyohost.txt >> collected_hosts.txt

sed '/^#/d' standard-package.txt >> collected_hosts.txt

sed '/^#/d' hostnames.txt >> collected_hosts.txt

sed '/^#/d' stevenhosts.txt >> collected_hosts.txt

cat collected_hosts.txt | awk '{print $2}' > collected_hosts_filtered.txt

cat badhostlist >> collected_hosts_filtered.txt

cat viberlist >> collected_hosts_filtered.txt

cat collected_hosts_filtered.txt | tr -d '\015'| sort -u | awk '{print $1}' > sortedlist.txt

sed '/^localhost/d' sortedlist.txt | grep "\." | awk '{print "0.0.0.0 ",$1;print "::0 ",$1}' > /etc/badhosts

This is placed on my server in /opt/gatherhosts/gather.sh. Beside it sits a badhostlist, which I collected earlier and which contains really bad sites (porn sites, warez, and such), and a viberlist, which contains a list of Viber servers I know. You can leave these out, but I can share my own badhostlist if you wish.

The output is a hosts file in /etc/badhosts, which is directly used by DNSMasq. It will contain the list of the unwanted sites with both IPv4 and IPv6.

Like this (just short example):

0.0.0.0 00-002.weebly.com

::0 00-002.weebly.com

0.0.0.0 0000bbffxzzzz900.000webhostapp.com

::0 0000bbffxzzzz900.000webhostapp.com

0.0.0.0 0.0.0.0.beeglivesex.com

::0 0.0.0.0.beeglivesex.com

there’s also the tiny package / binary “dos2unix” - which is as ancient as my time with UNIX (i.e. been using it at least 25 years)…

Available on Debian family distros :

sudo apt install dos2unix

And probably RH family :

sudo yum install dos2unix

Then :

dos2unix munted-microsoft-file.txt

Thanks, I wasn’t aware of dos2unix.

There are countless ways to do the same thing, probably sed would do it too.

I chose tr because it seemed to me the most natural thing for this task.

Wouldn’t it be easier and better, if you would use software that handles this automatically for you? This sounds like putting effort in something that already has been done over and over.

Example:

Welp, tried unique, straight up and piped, didn’t do the trick.

I gave tr -d '\015' a shot but it didn’t work either. I added dos2unix which did the trick, then since I wanted to make this portable to our other PCs I added an "if dos2unix exists" test with a download section if it doesn’t.
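An "is dos2unix there?" test can be sketched like this. Note the swap: instead of the download/install step described above, this version falls back to tr when dos2unix is missing, which is my assumption and not what the script in this post does. The helper name strip_cr_file is hypothetical.

```bash
#!/bin/sh
# Strip CRs from a file in place: use dos2unix when it is installed,
# otherwise fall back to tr (strip_cr_file is a hypothetical helper name).
strip_cr_file() {
    if command -v dos2unix >/dev/null 2>&1; then
        dos2unix -q "$1"                       # -q: no status messages
    else
        tmp=$(mktemp) && tr -d '\015' < "$1" > "$tmp" && cat "$tmp" > "$1"
        rm -f "$tmp"
    fi
}

# Demo: one hosts line with a Windows CR+LF ending.
demo=$(mktemp)
printf '0.0.0.0 example.com\r\n' > "$demo"
strip_cr_file "$demo"
od -c "$demo"                                  # the dump should show no \r
```

command -v is the portable way to ask whether a program is on the PATH; it prints nothing useful here, only its exit status is checked.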

I see there are some other hosts sources I can steal and add, thanks. I’ll post the ones I use lower on.

But then I’d miss the fun and the challenge and the flexibility and the learning and the fun … and the fun.

I tried sed every which way I could think of but it failed, then I searched and found that sed doesn’t have the ability at all. Bummer.

I hadn’t figured on adding anything for IPv6 yet, but since it’s made it around the corner, I might as well. Here’s my list of source files minus the ones from Steven Black which you had;

http://hostsfile.mine.nu/Hosts.txt

https://curben.gitlab.io/malware-filter/urlhaus-filter-hosts.txt

https://raw.githubusercontent.com/AdAway/adaway.github.io/master/hosts.txt

https://raw.githubusercontent.com/FadeMind/hosts.extras/master/add.2o7Net/hosts

https://raw.githubusercontent.com/FadeMind/hosts.extras/master/add.Spam/hosts

https://raw.githubusercontent.com/hoshsadiq/adblock-nocoin-list/master/hosts.txt

https://raw.githubusercontent.com/shreyasminocha/shady-hosts/main/hosts

https://raw.githubusercontent.com/Ultimate-Hosts-Blacklist/Ultimate.Hosts.Blacklist/master/hosts/hosts0

https://raw.githubusercontent.com/Ultimate-Hosts-Blacklist/Ultimate.Hosts.Blacklist/master/hosts/hosts1

https://winhelp2002.mvps.org/hosts.txt

“unique” or “uniq”?

The command is “uniq”…

If you did use “uniq” - there’s a slight chance some of the strings are different, so one string that looks much like another, has a slight difference, and thus you get both strings…
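One way to check for that kind of invisible difference is to count the lines that carry a carriage return and print them with the control characters made visible. A small sketch follows; the sample file and the name count_cr_lines are made up for illustration.

```bash
#!/bin/sh
# cr holds one literal carriage-return byte.
cr=$(printf '\r')

# Print how many lines of a file contain a CR.
count_cr_lines() {
    grep -c "$cr" "$1"
}

demo=$(mktemp)
printf 'example.com\nexample.com\r\nexample.org\n' > "$demo"

count_cr_lines "$demo"                 # number of lines carrying a CR

# sed's "l" command prints lines unambiguously: CR shows up as \r and
# the real end of each line is marked with $.
grep "$cr" "$demo" | sed -n l
```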

uniq vs. unique - I had to look at my notes to be sure which one was the sort switch and which one was the full command. I had them right in the script. BTW I like using the -xv switch to debug. That helps out a load when it tosses out an error!

I have the majority of microsoft.com’s sites blocked too because of their intrusive and unethical behavior.

Mouse, touchpad, swipe and keystroke recorders affiliated with Microsoft and required in order to use some of their products such as Teams. Such activities are what viruses do, not reputable businesses

List sources;

I tried to volunteer with the American Red Cross last fall and had to drop out. Their training was partially on Microsoft Teams and since I’ve got the majority of their domains blocked I wasn’t able to use Teams. I decided instead of bowing to ‘the man’ I’d bow out of the Red Cross. A warning to those using Teams, it records everything for Microsoft and for employers who have an employee or affiliate join their account meetings. Funny, but according to most states’ laws, you’re not allowed to record a phone call without clearly informing those on the other end, yet Microsoft and all monitoring account holders for Teams can record audio and video without so much as a hint that they’re doing so.

Is Microsoft above the law? Damned straight!

Here’s a handy-dandy online tool I use to check sites for their behavior;

No? What about sed -i 's/\r$//' filename ?

I would also remove leading and trailing white space (tabs and spaces):

sed -i 's/^\s*//' filename

sed -i 's/\s*$//' filename

Checked my notes and yep, tried that one and the white spaces are long gone.

I don’t know what you did exactly, but sed 's/\r//' reliably removes the CR character. This is according to the manual and I’ve used it a thousand times when processing CSV files.
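One possible reason sed seems to fail here is quoting, though that is only a guess about what happened. The sketch below assumes GNU sed, the default on Linux, where \r and \s are supported as extensions. The function name clean_line is hypothetical.

```bash
#!/bin/sh
# Assumes GNU sed; \r and \s are GNU extensions.
# The single quotes matter: unquoted, the shell strips the backslash and
# sed would see s/r$//, which deletes a trailing letter "r" instead of a CR.
clean_line() {
    sed -e 's/\r$//' -e 's/^\s*//' -e 's/\s*$//'
}

# A line with leading spaces, trailing space + tab, and a CR+LF ending.
printf '  0.0.0.0 example.com \t\r\n' | clean_line | od -c
```

The CR is removed first so that the trailing-whitespace pattern then sees the spaces and tabs that were sitting in front of it.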

@FBClark Thank you very much!

I incorporated your sources to my own gather script.

At the end I checked, and I have no duplicate of zhirok.com, for example.

Maybe I should comment, what my script does, so in a nutshell (I also have modified it a bit since with many new sources it’s now more clear):

#!/bin/bash

download()

{

wget -O hosts.txt "$1"

#download into hosts.txt the file with overwrite

sed '/^#/d' hosts.txt >> collected_hosts.txt

#remove the comment lines (starting with #) and append to the collected list

}

rm *.txt

#clean start

touch collected_hosts.txt

#create empty collected list

download https://www.adawayapk.net/downloads/hostfiles/official/3_yoyohost.txt

#download, remove comments, append, as in the above function

download https://www.adawayapk.net/downloads/hostfiles/official/2_ad_servers.txt

download https://www.adawayapk.net/downloads/hostfiles/official/1_hosts.txt

download https://getadhell.com/standard-package.txt

download https://raw.githubusercontent.com/notracking/hosts-blocklists/master/hostnames.txt

download https://raw.githubusercontent.com/StevenBlack/hosts/master/hosts

download http://hostsfile.mine.nu/Hosts.txt

download https://curben.gitlab.io/malware-filter/urlhaus-filter-hosts.txt

download https://raw.githubusercontent.com/AdAway/adaway.github.io/master/hosts.txt

download https://raw.githubusercontent.com/FadeMind/hosts.extras/master/add.2o7Net/hosts

download https://raw.githubusercontent.com/FadeMind/hosts.extras/master/add.Spam/hosts

download https://raw.githubusercontent.com/hoshsadiq/adblock-nocoin-list/master/hosts.txt

download https://raw.githubusercontent.com/shreyasminocha/shady-hosts/main/hosts

download https://raw.githubusercontent.com/Ultimate-Hosts-Blacklist/Ultimate.Hosts.Blacklist/master/hosts/hosts0

download https://raw.githubusercontent.com/Ultimate-Hosts-Blacklist/Ultimate.Hosts.Blacklist/master/hosts/hosts1

download https://winhelp2002.mvps.org/hosts.txt

cat collected_hosts.txt | awk '{print $2}' > collected_hosts_filtered.txt

#some lists resolve the hosts to 127.0.0.1, others to 0.0.0.0

#I want 0.0.0.0 as well as ::0 (ipv6) so I keep only the host names (second column)

#and place them in a filtered list

cat badhostlist >> collected_hosts_filtered.txt

#append my own previously collected list

cat viberlist >> collected_hosts_filtered.txt

#append viber servers list

cat collected_hosts_filtered.txt | tr -d '\015' | sort -u | awk '{print $1}' > sortedlist.txt

#cat prints the whole list, pipes into tr, which filters for ^M, then it is piped into sort,

#which sorts and drops duplicates, awk keeps only first column, so some comments on the end of

#lines will be removed and the thing gets into sortedlist.txt

sed '/^localhost/d' sortedlist.txt | grep "\." | awk '{print "0.0.0.0 ",$1;print "::0 ",$1}' > /etc/badhosts

#sed removes lines starting with localhost, pipes into grep which drops lines that do not have a dot

#(those are hostnames I don’t want to block, such as ip6-allnodes, and similar)

#grep pipes into awk, which prints 2 rows for the hostname, one starting with 0.0.0.0 for ipv4,

#and another starting with ::0 for ipv6

For me that seems to work perfectly, but keep in mind that this is not a system hosts file, but a hosts file for DNSMasq to look up first, before it sends the query to the upstream DNS server.

So it does not contain the entries a system hosts file should contain.
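For reference, the filtering steps in the script can also be folded into one pipeline that reads from stdin. This is only a sketch under a few assumptions: the name build_blocklist is made up, LC_ALL=C is added so the sort order is predictable, and the output uses a single space after the address where the script's awk prints two.

```bash
#!/bin/sh
# Read hosts-style lines on stdin, write a dnsmasq-style block list on stdout.
# build_blocklist is a hypothetical name; the filtering mirrors the script above.
build_blocklist() {
    tr -d '\015' |                                   # drop carriage returns
    awk '!/^#/ && $2 ~ /\./ && $2 !~ /^localhost/ { print $2 }' |
    LC_ALL=C sort -u |                               # sort, drop duplicates
    awk '{ print "0.0.0.0 " $1; print "::0 " $1 }'   # one IPv4 + one IPv6 row
}

# Demo input: a comment, localhost, a duplicate pair (one with CR+LF).
printf '# comment\n127.0.0.1 localhost\n0.0.0.0 example.com #[Spamdexing]\n127.0.0.1 example.com\r\n0.0.0.0 ads.example.net\n' |
    build_blocklist
```

The first awk does the work of the sed and grep steps in one pass: it skips comment lines, keeps only the second column, and drops names that have no dot or start with localhost.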

It’s so interesting that tr works for you but not for me. Another odd one is that I got mine working exactly the way I want it to on my laptop and it worked like a charm on my wife’s 2in1, but when I ran it on my wife’s old Toshiba laptop the dos2unix install scrambled plus I got an initial ‘file not found’ error that resolved itself the second time through.

All three are on LMDE4 so there’s no OS or even version differences, only hardware. Bash should be uniform, right?

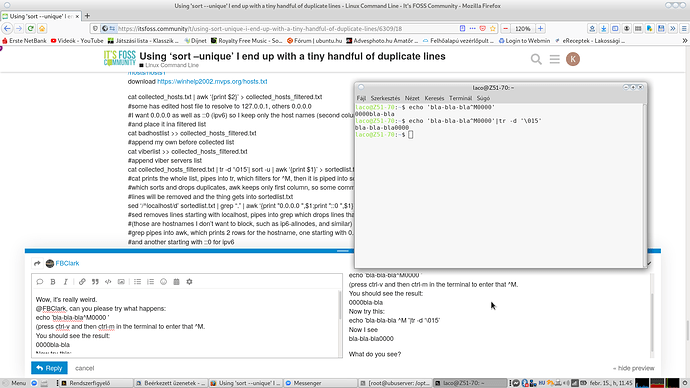

Wow, it’s really weird.

@FBClark, can you please try what happens:

echo 'bla-bla-bla^M0000'

(press ctrl-v and then ctrl-m in the terminal to enter that ^M)

You should see the result:

0000bla-bla

Now try this:

echo 'bla-bla-bla^M0000' | tr -d '\015'

Now I see

bla-bla-bla0000

What do you see?
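If typing the ^M by hand is fiddly, the same test can be run with printf, which turns \r in its format string into a real carriage return. A minimal sketch:

```bash
#!/bin/sh
# \r in a printf format string is a carriage return, so no Ctrl-V Ctrl-M needed.
printf 'bla-bla-bla\r0000\n' | od -c            # raw bytes, the CR shows as \r
printf 'bla-bla-bla\r0000\n' | tr -d '\015'     # prints bla-bla-bla0000
```

Piping through od -c shows the bytes themselves, so the result does not depend on how the terminal draws a carriage return.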