I put together this “playlist” so you get the whole Louis Rossmann view on what Apple is pulling off right now.

Apple is going down a bad road, but the reason they are getting backlash for it is that they told people what they are doing. Funny thing is, they were already doing things.

I think most people would agree that any measures to control the flood of child pornography are acceptable… I would agree on that as well. However, I am also convinced that it would be easy to change the algorithms and then start searching for… whatever they feel like searching for. We have to accept the fact that we are being spied on from all over the place, and that is going to get much, much worse.

The issues are numerous, though. First of all, anyone who has at least a bit of life experience, e.g. anyone who is at least 20 years old, knows what companies do and what will happen here. They lie, they screw people over and use every single loophole available to benefit themselves instead of the customer/user. Most of the time that behaviour is understandable, but too often it goes too far. In this situation, they are trying to seem honourable, when in reality they are trying to justify a tool straight from 1984 by mentioning “child abuse”, and that’s it. They think we are so stupid that we really believe they will strictly only use it for child abuse. No, that’s not true, and everyone with a bit of life experience and half a brain knows that it won’t stay like that. If such an algorithm starts running on iPhones today, then we will see such algorithms on every new phone in 10 years, though not searching for child porn, but for anything they need for marketing to and exploiting the user, possibly even to deeply manipulate the user of the device.

Secondly, all those “any measure is justified, BECAUSE CHILDREN” morons (especially in the USA, I hate American people when the topic in discussion is children, because they always think children are the stupidest beings on earth, when they have 40 year old junkies snorting huge amounts of coke every single day) always accuse you of being pro child abuse or porn, just because you say “no” to a single measure, that deeply cuts into the user’s privacy. This is pure propaganda and professional manipulation tactics, nothing less.

Thirdly, all those “any measure is justified, BECAUSE CHILDREN” morons do not realise that they are basically searching for like 0.000001% of the population, by destroying the privacy of 99% of the other people, bit by bit, over the years. This is not fine. No, this is not fine, even when we are talking about bEcAuSe cHiiLdReN…!

Fourthly, what’s the point of saving a couple of children (are there any statistics on how many children were actually saved because of CSAM scanning? I doubt the number is that big or in any way noteworthy), when in the end we have manifested 1984 in reality and every human is spied upon to the bone, like at an airport, but for their whole life? What’s the point of having children and bringing them up, if the world is ruined anyway? Children do not deserve to grow up in such a world.

Fifthly, as can be seen in the GitHub project I linked in the OP, it’s not yet clear if the feature can distinguish clearly enough between normal child pictures and actual illegal pictures of children. Apple denies this; however, it’s not proven that it actually works that well.

Sixthly, they are right now working on reverse engineering pictures from the algorithm. Basically, it could theoretically be possible to get illegal child pictures from this new “feature” on your phone, without ever being any kind of child abuser or exploiter.

Seventhly, there is no absolute guarantee that the CSAM database contains literally only child abuse picture hashes. These are only hashes. What if North Korea wants a Japanese guy dead? They just add a hash of this Japanese guy’s dog to the database and boom, he is considered a child abuser and will never return home, ever again. Thanks, Apple, for supporting dictators and anti-democratic practices.

A picture from one of the GitHub issues:

Privacy is important. Freedom is important. All the important things have unrecognisable price tags attached to them. But everyone with half a brain knows, they cost. They cost a lot. We need these human rights to exist and be enforced, even if that means, not every person on earth has the best life ever.

You say porn.

I say - I’m holding my little child trying to put on the first diaper.

Next thing you know - some over the top activist at Apple calls the police and you are covered in baby crap trying to answer the door.

We all look at the Jetsons and say - that is awesome. The gap between what could have been and what is, when it comes to what technology can do, is so vast that there isn’t a valley big enough in our solar system to represent it. Just like health care, which has blown all credibility, tech companies are in the exact same boat. You can’t tell me you are scanning my photos and texts for earmarks of x, y, z when you have said lesser things in the past and done far more and far worse and lied about it.

First hand example from a second party.

Two women were texting about one woman’s kid going back to school this week. She texted something about tater tots from Sonic. She never goes there, but she is in a new state, saw it, stopped and ate. She sent a text and an image of herself eating to her friend. Two hours later, her friend posted about all the tater-tot ads showing up on her Facebook, Instagram, TikTok and Pinterest pages. Only one had an iPhone. Only one had all this stuff happen. I told my friend about it. I told him the story in person. I texted him later about certain things. He saw nothing - same as me. He texted/messaged his wife (iPhone) and she had those things on her Pinterest and Instagram.

All that to say - it doesn’t matter (to me) what Apple or Google say they are doing - they can’t be trusted to do just that. They are always doing much more.

This is a very good example, and it has been a huge problem for years. The only thing worse in these situations is that most people don’t even get it, or, when they understand what is happening, they think it’s “normal”. Absolutely disgusting.

Wait… hang on…

→ “NORMAL”

All caps. NORMAL!

That means it’s more normal than normal - to be a natural thing HAHA

HAHA

I would like to add some thoughts:

- Please stop talking about child pornography. There’s no such thing. It’s child abuse. Full stop.

- There is no commercial market for child abuse material to speak of. Professional prosecutors all agree on the fact that the trade of such material is based on exchange. There is a certain amount of material floating around on the net (often created in the 90s after the crumbling of institutional authority in Eastern Europe) which appears on many social networks. New abuse material hardly leaves the closed circles. This makes investigation of these crimes a very complicated task, as you have to gain the trust of the criminal network to get access to new material, usually by providing new, previously unseen material of your own.

This is the same problem investigators face with any kind of organized crime: drug dealing, terrorism, human trafficking, insider trading and corruption (the latter three are usually hardly investigated at all, despite the enormous damage they cause).

- Due to the difficulty of investigating this type of crime and the unwillingness of politicians to increase social care, child protection services and expand police and judicial resources (it’s all about money), the same politicians are tempted by magical solutions, i.e. technical surveillance combined with the wonders of AI, whose principles none of them really understand. Clever marketing by the companies providing technical solutions leads people to grossly overestimate their abilities and underestimate their dangers.

- The rightful feeling of horror and fear, associated with child abuse and terrorism, makes people willing to accept almost any kind of privacy invasion, opening the door to total surveillance.

- I don’t trust any government or company to limit their curiosity to the issues they are supposed to. Once there’s an open door, they will always start to broaden their snooping around. I also don’t trust them to keep their technology and data safe enough. The people employed in these institutions can be bribed or pursue their own agenda. It’s a known fact that police officers really often use their access to restricted databases for stalking purposes.

- I don’t trust the security of technical solutions. If you build a small backdoor for the good guys, it automatically becomes a huge one for the bad guys. The US forces’ biometric database now in the hands of the Taliban is an excellent example.

False positives are also a huge issue. It has already been proven that Apple’s hashing algorithm will create the same hashes for semantically different images. This is not a problem of the particular algorithm, but a general one: this kind of algorithm needs to be insensitive to cropping and filtering, so many visually related images must map to the same hash. The set of hash keys also must be considerably smaller than the set of all images, so collisions between unrelated images cannot be ruled out.
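To make the collision point concrete, here is a minimal sketch using a toy "average hash" on made-up 4x4 grayscale grids. This is not Apple's algorithm; the function name `average_hash` and all pixel values are assumptions chosen purely for illustration. It only shows the general property: a hash that tolerates filtering also lets different images share a hash.

```python
def average_hash(pixels):
    """Toy perceptual hash: one bit per pixel, 1 if brighter than the image mean."""
    flat = [p for row in pixels for p in row]
    mean = sum(flat) / len(flat)
    return "".join("1" if p > mean else "0" for p in flat)

# Hypothetical 4x4 grayscale "image" (values 0-255).
image_a = [
    [200, 210, 30, 20],
    [190, 220, 40, 10],
    [25, 35, 180, 230],
    [15, 45, 205, 215],
]

# A brightness filter applied to image_a: every pixel shifted by +20.
brightened = [[p + 20 for p in row] for row in image_a]

# A different hypothetical image: other pixel values, same bright/dark layout.
image_b = [
    [140, 250, 90, 0],
    [255, 130, 60, 80],
    [70, 5, 160, 128],
    [95, 50, 240, 135],
]

hash_a = average_hash(image_a)
hash_filtered = average_hash(brightened)
hash_b = average_hash(image_b)

# The filtered copy matches by design; the unrelated image matches by accident.
same_after_filter = hash_a == hash_filtered
collision = image_a != image_b and hash_a == hash_b

# Pigeonhole: far fewer possible hashes than possible images.
possible_hashes = 2 ** 16
possible_images = 256 ** 16
```

Here both `same_after_filter` and `collision` come out true, and `possible_images` is vastly larger than `possible_hashes`. Real perceptual hashes are far more sophisticated than this toy, but they face the same trade-off.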

Of course, authorities and companies will assure us that, if you were falsely identified by the algorithm, you will have nothing to fear. I don’t believe a single word of it.

- I do believe child abuse and imagery created of such abuse are a huge and serious problem, which deserves to be tackled in the proper manner: by providing law enforcement with the necessary resources and manpower and above all by better child care in communities and institutions, namely kindergartens and schools. Technical magic will not cure the underlying problems in society.

At best, it will spot a few symptoms, giving politicians and corporations ease of mind, so they can avoid spending money on the underlying problems.

At worst, it will create a surveillance infrastructure leading to censorship, prosecution of innocents and opening private data to criminals, leading to blackmail and other crimes.

A little more than 2¢

What a great assessment! Perfect! Bravo!

I agree 100%. In my opinion, it’s a summary of the full analysis of the situation we have right now.

Let me just respond to a little part of this great explanation.

This is one of the main issues that privacy advocates usually complain about. Not only in this situation, but every time there is an algorithm (or humans, whatever) doing any sort of stuff, where you have to find the “bad guys”.

In judicial systems of Western Democratic Societies, there is a very important rule:

Innocent until proven guilty.

It is based on the philosophical opinion, that it’s better to have 10 “bad guys” running free, than just a single person being imprisoned wrongfully.

I strongly agree with that opinion. Nobody should go to prison or be otherwise punished for something they have never done. It’s like destroying someone’s life, randomly.

Now, if you have an algorithm or a system or a group of people or whatever software/hardware/humanware, that have to “decide” if something is considered e.g. illegal or not, then you can, at this time in our progress of technology, never prove, that it is impossible to get false positives. As long as this possibility exists, people may go to prison for doing nothing wrong.

Usually, I would agree that having an extremely low percentage of false positives might be acceptable. However, in this specific spicy topic, I know that just wrongfully convicting a single person would already be too much. If you are a supposed child abuser and go to prison for that, literally everyone hates you (and that’s right, as long as you are not convicted wrongfully), the normal society, the prison inmates, the guards, the judges, the lawyers… Literally everyone hates you.

So, if only a single person is convicted wrongfully because of an algorithm and some dude who is paid 5 bucks an hour to check if the picture is actually abusive accidentally clicks on “yes” or something, then it’s already too much, because you destroyed an entire life of a random person. In my opinion, you might as well execute that person. People who are innocent are probably more prone to not being able to deal with the consequences for something they have not done.
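A back-of-the-envelope sketch of why even a tiny false-positive rate matters at this scale. Every number below is a made-up assumption for illustration (user count, prevalence, detection rate, false-positive rate); none of them come from Apple or from any study.

```python
# All figures are hypothetical assumptions, chosen only to illustrate the base-rate effect.
users = 1_000_000_000          # assumed number of scanned accounts
prevalence = 1 / 100_000       # assumed share of users who actually hold illegal material
detection_rate = 0.9           # assumed chance a real offender gets flagged
false_positive_rate = 1e-6     # assumed chance an innocent user gets flagged

innocent_users = users * (1 - prevalence)
guilty_users = users * prevalence

wrongly_flagged = innocent_users * false_positive_rate
rightly_flagged = guilty_users * detection_rate

# Share of all flagged accounts that belong to innocent people.
share_wrong = wrongly_flagged / (wrongly_flagged + rightly_flagged)

print(f"innocent people flagged: about {wrongly_flagged:.0f}")
print(f"share of flags that are wrong: about {share_wrong:.0%}")
```

With these assumed inputs, a one-in-a-million error rate still flags roughly a thousand innocent people, about one in ten of all flags. Change the assumptions and the numbers move, but the count of wrongly flagged people only reaches zero if the false-positive rate is exactly zero.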

I also highly doubt the actual and rightful conviction rate of actual abusers will be that high or if there will be one, at all. If there are abusers smart enough to use the dorknet, then they are also smart enough to not save their material in any cloud or on their iPhones.

Additionally, reality is not black & white. It’s not like every child abuse picture on earth is showing a naked child or something worse. Some are just showing a kid doing nothing. It’s still child abuse, because the child does not know what will happen next and perhaps already has fear written on their face. Imagine such a picture. There is a kid, fully dressed, standing in front of a wall, looking into the camera of a disgusting stranger. The kid is obviously stressed and has fear written on their face. Is this child abuse? In my opinion, yes. However, does this count as a child abuse picture from the perspective of the algorithm or the humans behind it? Not if it’s not in the database. Not if a random 5 bucks dude looks at it at 22:55 when his shift ends at 23:00.

On the other side, imagine you took a picture of your child in the bath or whatever. Literally every person I met who also has children has such photos of their children. I think pretty much all parents have such photos of their children. It’s not in the CSAM database, so it’s safe, right? Well, but it’s still showing a naked child. So, is it really safe? Is it really safe in 10 years? 20 years? 30 years? Maybe in 20 years, you are a child abuser for having such a picture on your phone, because maybe they extended their algorithm to find material that is not part of the CSAM database. Who knows. Nobody knows. It’s just speculation on one hand, but on the other hand, we all know they won’t keep the algorithm limited to what it is supposed to do now. They will extend it at some point, to search for more. Everyone (with half a brain cell) knows this, at least.

The road to hell is paved with good intentions.

That was written really well Mina!! I have to agree with you on everything. Changing “child pornography” into “child abuse” was very good …I would like to see that get done everywhere. We will never know how many children are being abused but I am willing to bet that everyone would be horrified if the truth ever came out.

@esc, I reckon most of us agree on your feelings about child abuse, and yet believe that prevention and prosecution should be carried out within the rule of law.

You neither addressed the concerns expressed by users in this thread, nor told us what kind of measures you propose.

What do you think of the idea of installing CCTV in every room of every building in the country, with a trained AI that could trigger an immediate police search of the location?

If you’re innocent, you have nothing to fear, right?

If this goes too far, you agree that there is a conflict of rights and a line should be drawn somewhere. The question is “where?”.

As I said before, proper staffing and training of law enforcement, children’s services and social care seems to me more effective in the goal of protecting the vulnerable than outsourcing crowd surveillance to private companies and it doesn’t create any conflicts with other human rights.

My personal view is that the fight against child abuse on the internet has already been lost. According to the Internet Watch Foundation, the number of pictures containing child abuse has risen by 50% since Covid-19, and presumably the amount of actual abuse has risen as well. To me that doesn’t sound like any of the measures that have been taken to protect children have worked at all. I also see a huge need to inform children about possible dangers that they themselves are creating. Apparently the biggest source of new pictures is children themselves. According to a recent report it is now “chic” (hope that’s a word that is understood by native English speakers?) for children to photograph themselves and then pass the pictures on to other children. In a recent documentary a school’s director invited parents to an evening discussion and then proceeded to show them what had been found on confiscated mobile phones. Most of the parents couldn’t look at the PowerPoint file because the contents were so disturbing. Most of the pictures shown were taken by the children themselves, but there were also many of adults, as well as incredibly violent video clips showing terrible things. I have no idea at all what kind of solution can be implemented to take care of that.

On a final note: I have also read that many authorities and people connected to protecting children have predicted that child abuse may very well become more acceptable in future times. Brave new world.

As important as the topic is, this forum is not the place to discuss it in all its aspects. As far as aspects of technology and its legislation are concerned, I consider the exchange of arguments valid. However, we should see that it doesn’t go completely out of hand. Specific UK practices are, in my opinion, beyond the scope of what we should discuss here.

Having two relatively small children of my own, I do appreciate the discussion and any ideas to protect them and other, probably more vulnerable, children from dangers on- and offline. The question of grooming that you mentioned is actually of utmost importance, as it happens mostly out of sight of parents and caretakers.

In that respect, I’d like to expand on the prior comment:

Education of children, caretakers and educators is key to protecting potential victims.

My views might be ethnocentric, but there is hardly anything that can be done outside jurisdictions where legal principles and human rights are respected and where there are institutions to enforce them.

To summarize: I don’t believe in purely technical solutions to social problems. People and politicians are very easily fooled into believing that technology can magically solve problems without spending the necessary amount of money and effort. This pretext creates social consent to curtail other human rights with all its (un?)intended consequences.

I’m not willing to accept magical thinking as a basis for political decisions, be it the faith in divine powers or in technology, which most decision-makers hardly understand.

I agree with a lot that you have written… However, I will stick with my point of view that the fight has already been lost. The only thing that seems to work is publicity regarding the abusers and the shame that is involved (personally I believe the shame cannot be high enough); however, this leads to a lot of suicides and does not improve the situation (conclusively proved in Thailand). In Pakistan child abusers are publicly executed (which is of course terrible), but it has led to an increase in abuse.

Your link to the internet article contains the following sentence:

“The investigation has made practical recommendations that minimise the opportunities for abuse facilitated by the internet in the future…”

That sounds quite positive but in fact it isn’t. GCHQ in Cheltenham/UK used to have a large taskforce regarding child trafficking. This force has been reduced to a “let’s make a new flyer” to inform people. If you compare what GCHQ is doing to the Bundesnachrichtendienst in Wiesbaden/Germany you can immediately see that the UK has accepted that it has lost the battle.

That in the thread no one has spoken up for the children is not correct. Mina spoke up for the kids and I did as well.

Wow…you give up very easily?

Discussions should be controversial… that’s how we learn new things. As long as people remain polite to one another a healthy discussion is profitable for all who take part in it?

I think this summarises the view you have on this topic. This view I strongly disagree with.

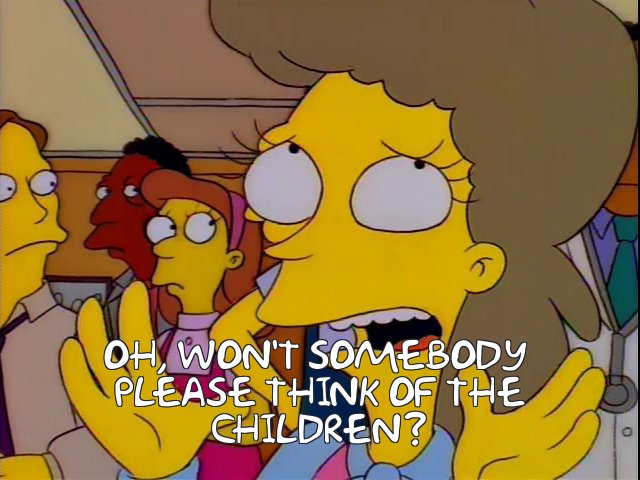

https://c.tenor.com/95aAWbLW8yAAAAAC/think-of-the-children-wont-somebody-please.gif

The user @esc asked me to delete his account, and I complied with his wish.

This also means that his posts in this thread are now hidden (but can still be viewed). However, it seems he did not have much interest in discussing his concerns or in providing any kind of new proposal.

First, you wish for a police state and basically North Korea 2, where everyone suffers, EVEN CHILDREN, because children growing up in North Korea are lost for their whole lives, without a chance to escape. The only chance for them to escape is to go to South Korea. However, they never forget what has been done in North Korea (2).

Then, you want to escape this dilemma, because people, rightfully, respond to your extreme proposition with some sense. Isn’t that ironic?

Guess what, there is no way to truly escape from a necrocracy.

Well, I feel a bit responsible for him asking to have his account deleted… but to be quite honest… it was his decision, and it sure won’t keep me awake at night.